garage.envs.task_name_wrapper

Wrapper for adding environment info to track the task ID.

-

class

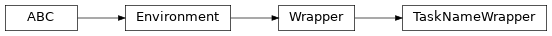

TaskNameWrapper(env, *, task_name=None, task_id=None)¶ Bases:

garage.Wrapper

Add task_name or task_id to env infos.

- Parameters

-

step(self, action)

gym.Env step for the active task env.

- Parameters

action (np.ndarray) – Action performed by the agent in the environment.

- Returns

- np.ndarray: Agent’s observation of the current environment.

- float: Amount of reward yielded by previous action.

- bool: True iff the episode has ended.

- dict[str, np.ndarray]: Contains auxiliary diagnostic information about this time-step.
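The behaviour described above can be sketched without garage installed. The snippet below is a minimal illustration, not garage's implementation: `ToyEnv` and `TaskInfoSketch` are hypothetical stand-ins, and the info keys `'task_name'` and `'task_id'` are assumed from the class description ("Add task_name or task_id to env infos").

```python
class ToyEnv:
    """Hypothetical stand-in environment (not part of garage)."""

    def step(self, action):
        observation = [0.0, 0.0]
        reward = 1.0
        done = False
        info = {}
        return observation, reward, done, info


class TaskInfoSketch:
    """Illustrative wrapper that tags each step's info dict with task metadata."""

    def __init__(self, env, *, task_name=None, task_id=None):
        self._env = env
        self._task_name = task_name
        self._task_id = task_id

    def step(self, action):
        obs, reward, done, info = self._env.step(action)
        # Only add the fields that were actually provided (assumed behaviour).
        if self._task_name is not None:
            info['task_name'] = self._task_name
        if self._task_id is not None:
            info['task_id'] = self._task_id
        return obs, reward, done, info


env = TaskInfoSketch(ToyEnv(), task_name='push-v1', task_id=3)
obs, reward, done, info = env.step(action=[0.1])
```

With this sketch, downstream code such as a multi-task sampler could group transitions by reading `info['task_name']` or `info['task_id']` instead of inspecting the environment object.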

- Return type

-

property action_space(self)

akro.Space: The action space specification.

-

property observation_space(self)

akro.Space: The observation space specification.

-

property spec(self)

EnvSpec: The environment specification.

-

property render_modes(self)

list: A list of strings representing the supported render modes.

-

reset(self)

Reset the wrapped env.

- Returns

- The first observation, conforming to observation_space.

- dict: The episode-level information. Note that this is not part of the env_info provided in step(). It contains information about the entire episode, which may be needed to determine the first action (e.g. in goal-conditioned or multi-task RL (MTRL) settings).
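To make the role of the episode-level information concrete, here is a minimal sketch under stated assumptions: `ToyGoalEnv`, the `'goal'` key, and `first_action` are all hypothetical and only mirror the two-value return described above. It shows a goal-conditioned case where the first action cannot be chosen from the observation alone.

```python
class ToyGoalEnv:
    """Hypothetical goal-conditioned environment (not part of garage)."""

    def reset(self):
        first_obs = [0.0, 0.0]
        # Episode-level information: fixed for the whole episode,
        # and separate from the per-step info returned by step().
        episode_info = {'goal': [1.0, -1.0]}
        return first_obs, episode_info


def first_action(obs, episode_info):
    """Toy policy: move straight toward the goal given at reset time."""
    goal = episode_info['goal']
    return [g - o for g, o in zip(goal, obs)]


env = ToyGoalEnv()
first_obs, episode_info = env.reset()
action = first_action(first_obs, episode_info)
```

Here the policy reads the goal from `episode_info` before any call to `step()`, which is the situation the note above describes.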

- Return type

numpy.ndarray

-

render(self, mode)

Render the wrapped environment.

-

visualize(self)

Creates a visualization of the wrapped environment.

-

close(self)

Close the wrapped env.

-

property unwrapped(self)

garage.Environment: The inner environment.