garage.tf.algos.rl2

Module for RL2.

This module contains RL2, RL2Worker and the environment wrapper for RL2.

-

class RL2Env(env)

Bases: garage.Wrapper

Environment wrapper for RL2.

In RL2, the observation is concatenated with the previous action, reward, and terminal signal to form the new observation.

- Parameters

env (Environment) – An env that will be wrapped.
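A minimal sketch of this observation layout, using plain Python lists in place of numpy arrays. The helper names `augment_obs` and `first_obs`, the concatenation order (observation, action, reward, terminal flag), and the zero padding of the first observation of an episode (when no previous step exists) are assumptions for illustration, not the wrapper's actual code.

```python
from typing import List, Sequence


def augment_obs(obs: Sequence[float], prev_action: Sequence[float],
                prev_reward: float, prev_terminal: bool) -> List[float]:
    """Append the previous action, reward and terminal flag to obs.

    Hypothetical helper; the order of the pieces is an assumption.
    """
    return list(obs) + list(prev_action) + [float(prev_reward),
                                             float(prev_terminal)]


def first_obs(obs: Sequence[float], action_dim: int) -> List[float]:
    """First step of an episode: no previous step, so pad with zeros
    (assumed)."""
    return augment_obs(obs, [0.0] * action_dim, 0.0, False)


# 3-D observation + 2-D action + reward + terminal flag = 7 entries.
o0 = first_obs([0.1, 0.2, 0.3], action_dim=2)
o1 = augment_obs([0.4, 0.5, 0.6], [1.0, -1.0], prev_reward=0.5,
                 prev_terminal=False)
print(len(o0), o1)  # 7 [0.4, 0.5, 0.6, 1.0, -1.0, 0.5, 0.0]
```

Under this assumed layout the augmented observation has obs_dim + action_dim + 2 entries, so the wrapper's observation_space has to be larger than the wrapped env's.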

-

property observation_space(self)

akro.Space: The observation space specification.

-

property spec(self)

EnvSpec: The environment specification.

-

reset(self)

Call reset on the wrapped env.

- Returns

- numpy.ndarray: The first observation conforming to observation_space.

- dict: The episode-level information.

Note that this is not part of the env_info provided in step(). It contains information about the entire episode, which could be needed to determine the first action (e.g. in the case of goal-conditioned RL or MTRL).

- Return type

tuple(numpy.ndarray, dict)

-

step(self, action)

Call step on the wrapped env.

- Parameters

action (np.ndarray) – An action provided by the agent.

- Returns

The environment step resulting from the action.

- Return type

EnvStep

- Raises

RuntimeError – if step() is called after the environment has been constructed and reset() has not been called.
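The reset-before-step contract can be illustrated with a toy environment. `GuardedEnv` is hypothetical and only mimics the documented behavior: reset() returns an (observation, episode-info) pair, and step() raises RuntimeError until reset() has been called. For brevity its step() returns a bare observation rather than an EnvStep.

```python
class GuardedEnv:
    """Hypothetical toy env that refuses to step before the first reset()."""

    def __init__(self):
        self._needs_reset = True  # freshly constructed: reset() required
        self._t = 0

    def reset(self):
        self._needs_reset = False
        self._t = 0
        # (first observation, episode-level info), as documented for reset()
        return [0.0], {}

    def step(self, action):
        if self._needs_reset:
            raise RuntimeError('reset() must be called before step().')
        self._t += 1
        return [float(self._t)]  # toy observation: the step counter


env = GuardedEnv()
try:
    env.step([0.0])
    raised = False
except RuntimeError:
    raised = True

first_obs, episode_info = env.reset()
next_obs = env.step([0.0])
print(raised, first_obs, next_obs)  # True [0.0] [1.0]
```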

-

property action_space(self)

akro.Space: The action space specification.

-

property render_modes(self)

list: A list of strings representing the supported render modes.

-

render(self, mode)

Render the wrapped environment.

-

visualize(self)

Creates a visualization of the wrapped environment.

-

close(self)

Close the wrapped env.

-

property unwrapped(self)

garage.Environment: The inner environment.

-

class RL2Worker(*, seed, max_episode_length, worker_number, n_episodes_per_trial=2)

Bases: garage.sampler.DefaultWorker

Initialize a worker for RL2.

In RL2, the policy does not reset between episodes within a meta batch. It resets only once, at the beginning of each trial/meta batch.

- Parameters

seed (int) – The seed to use to initialize random number generators.

max_episode_length (int or float) – The maximum length of episodes to sample. Can be (floating point) infinity.

worker_number (int) – The number of the worker where this update is occurring. This argument is used to set a different seed for each worker.

n_episodes_per_trial (int) – Number of episodes sampled per trial/meta batch. The policy resets at the beginning of a meta batch and then obtains n_episodes_per_trial episodes within that meta batch.
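The reset schedule can be sketched as below. `CountingPolicy` and `sample_trial` are hypothetical stand-ins rather than RL2Worker itself; the environment is omitted, and every toy episode simply runs for max_episode_length steps. The point is that reset() is called once per trial, so the hidden state keeps accumulating across episode boundaries.

```python
class CountingPolicy:
    """Hypothetical recurrent policy that counts its resets."""

    def __init__(self):
        self.n_resets = 0
        self.hidden = 0  # toy hidden state: number of actions taken

    def reset(self):
        self.n_resets += 1
        self.hidden = 0

    def get_action(self, obs):
        self.hidden += 1
        return 0, {}  # (action, agent_info)


def sample_trial(policy, n_episodes_per_trial, max_episode_length):
    """Sample one trial: one policy reset, then several episodes."""
    policy.reset()  # the only reset in the whole trial/meta batch
    lengths = []
    for _ in range(n_episodes_per_trial):
        steps = 0
        while steps < max_episode_length:  # toy env never terminates
            policy.get_action(None)
            steps += 1
        lengths.append(steps)
        # No policy.reset() here: the hidden state carries over.
    return lengths


policy = CountingPolicy()
lengths = sample_trial(policy, n_episodes_per_trial=2, max_episode_length=5)
print(lengths, policy.n_resets, policy.hidden)  # [5, 5] 1 10
```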

-

env

The worker’s environment.

- Type

Environment or None

-

start_episode(self)

Begin a new episode.

-

rollout(self)

Sample a single episode of the agent in the environment.

- Returns

The collected episode.

- Return type

EpisodeBatch

-

worker_init(self)

Initialize a worker.

-

update_agent(self, agent_update)

Update an agent, assuming it implements Policy.

- Parameters

agent_update (np.ndarray or dict or Policy) – If a tuple, dict, or np.ndarray, these should be parameters for the agent, which should have been generated by calling Policy.get_param_values. Alternatively, a policy itself. Note that other implementations of Worker may take different types for this parameter.

-

update_env(self, env_update)

Use any non-None env_update as a new environment.

A simple env update function. If env_update is not None, it should be the complete new environment.

This allows changing environments by passing the new environment as env_update into obtain_samples.

- Parameters

env_update (Environment or EnvUpdate or None) – The environment to replace the existing env with. Note that other implementations of Worker may take different types for this parameter.

- Raises

TypeError – If env_update is not one of the documented types.
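The dispatch described above can be sketched as follows. `Environment` and `EnvUpdate` here are minimal hypothetical stand-ins for the garage types (an EnvUpdate is modeled as a callable that builds the new environment from the old one, which is an assumption), and `update_env` is a free function rather than the worker method.

```python
class Environment:
    """Hypothetical stand-in for garage.Environment."""

    def __init__(self, name):
        self.name = name


class EnvUpdate:
    """Hypothetical stand-in: a callable that builds the new environment."""

    def __init__(self, name):
        self.name = name

    def __call__(self, old_env=None):
        return Environment(self.name)


def update_env(current_env, env_update):
    """Return the environment to use after applying env_update."""
    if env_update is None:
        return current_env  # nothing to do: keep the existing env
    if isinstance(env_update, Environment):
        return env_update  # a complete new environment
    if isinstance(env_update, EnvUpdate):
        return env_update(current_env)  # build it from the update
    raise TypeError(f'Unknown environment update type: {type(env_update)}')


env = Environment('a')
kept = update_env(env, None)
replaced = update_env(env, Environment('b'))
built = update_env(env, EnvUpdate('c'))
try:
    update_env(env, 42)
    rejected = False
except TypeError:
    rejected = True
print(kept.name, replaced.name, built.name, rejected)  # a b c True
```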

-

step_episode(self)

Take a single time-step in the current episode.

- Returns

True iff the episode is done, either due to the environment indicating termination or due to reaching max_episode_length.

- Return type

bool
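The done condition amounts to a single boolean test. `is_episode_done` is a hypothetical helper, not a worker method; comparing with `>=` against a float cap lets `math.inf` represent an unbounded max_episode_length.

```python
import math


def is_episode_done(terminal: bool, path_length: int,
                    max_episode_length: float) -> bool:
    """True iff the env signalled termination or the length cap is hit."""
    return terminal or path_length >= max_episode_length


print(is_episode_done(False, 3, 5))             # False
print(is_episode_done(True, 3, 5))              # True
print(is_episode_done(False, 5, 5))             # True: cap reached
print(is_episode_done(False, 10**6, math.inf))  # False: no cap
```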

-

collect_episode(self)

Collect the current episode, clearing the internal buffer.

- Returns

A batch of the episodes completed since the last call to collect_episode().

- Return type

EpisodeBatch

-

shutdown(self)

Close the worker’s environment.

-

class NoResetPolicy(policy)

A policy that does not reset.

For RL2 meta-test, the policy should not reset after meta-RL adaptation. The hidden state is retained as-is, because the hidden state is where the adaptation takes place.

- Parameters

policy (garage.tf.policies.Policy) – Policy itself.

- Returns

The wrapped policy that does not reset.

- Return type

garage.tf.policies.Policy
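A sketch of the no-reset idea, assuming a recurrent policy whose only state is a hidden counter. `ToyRecurrentPolicy` and `NoResetWrapper` are hypothetical names; the wrapper forwards get_action() and turns reset() into a no-op, so whatever hidden state was built up during adaptation survives a reset.

```python
class ToyRecurrentPolicy:
    """Hypothetical recurrent policy; its hidden state is a step counter."""

    def __init__(self):
        self.hidden = 0

    def reset(self):
        self.hidden = 0

    def get_action(self, obs):
        self.hidden += 1
        return obs * self.hidden, {'hidden': self.hidden}


class NoResetWrapper:
    """Sketch of a wrapper whose reset() deliberately does nothing."""

    def __init__(self, policy):
        self._policy = policy

    def reset(self):
        pass  # keep the hidden state built up during adaptation

    def get_action(self, obs):
        return self._policy.get_action(obs)


inner = ToyRecurrentPolicy()
inner.get_action(1.0)  # two steps of "adaptation" advance the hidden state
inner.get_action(1.0)
wrapped = NoResetWrapper(inner)
wrapped.reset()        # no effect on inner.hidden
action, info = wrapped.get_action(2.0)
print(action, info)    # 6.0 {'hidden': 3}
```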

-

reset(self)

Policy reset function. It does nothing, so the hidden state is kept.

-

get_action(self, obs)

Get a single action from this policy for the input observation.

- Parameters

obs (numpy.ndarray) – Observation from environment.

- Returns

- numpy.ndarray: Predicted action.

- dict: Agent info.

- Return type

tuple(numpy.ndarray, dict)

-

get_param_values(self)

Return values of params.

- Returns

Policy parameter values.

- Return type

np.ndarray

-

set_param_values(self, params)

Set param values.

- Parameters

params (np.ndarray) – A numpy array of parameter values.

-

class RL2AdaptedPolicy(policy)

An RL2 policy after adaptation.

- Parameters

policy (garage.tf.policies.Policy) – Policy itself.

-

reset(self)

Policy reset function. It restores the initial hidden state captured at adaptation.

-

get_action(self, obs)

Get a single action from this policy for the input observation.

- Parameters

obs (numpy.ndarray) – Observation from environment.

- Returns

- numpy.ndarray: Predicted action.

- dict: Agent info.

- Return type

tuple(numpy.ndarray, dict)

-

get_param_values(self)

Return values of params.

- Returns

- np.ndarray: Policy parameter values.

- np.ndarray: Initial hidden state, which will be set every time the policy is used for meta-test.

- Return type

tuple(np.ndarray, np.ndarray)

-

set_param_values(self, params)

Set param values.

- Parameters

params (Tuple[np.ndarray, np.ndarray]) – Two numpy arrays of parameter values: one for the network parameters and one for the initial hidden state.
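A sketch of this parameter layout. `AdaptedPolicySketch` is hypothetical, uses lists instead of numpy arrays, and assumes that reset() restores the stored initial hidden state, which is how the get_param_values() description (the hidden state "will be set every time the policy is used for meta-test") is read here.

```python
class AdaptedPolicySketch:
    """Hypothetical: network params plus a captured initial hidden state."""

    def __init__(self, weights, initial_hidden):
        self._weights = list(weights)
        self._initial_hidden = list(initial_hidden)
        self.hidden = list(initial_hidden)

    def reset(self):
        # Assumed behavior: each meta-test episode starts from the
        # hidden state captured at adaptation, not from zeros.
        self.hidden = list(self._initial_hidden)

    def get_param_values(self):
        return list(self._weights), list(self._initial_hidden)

    def set_param_values(self, params):
        weights, initial_hidden = params  # expects a 2-tuple
        self._weights = list(weights)
        self._initial_hidden = list(initial_hidden)


policy = AdaptedPolicySketch([0.5, -0.5], [0.1, 0.2])
policy.hidden = [9.0, 9.0]  # the hidden state drifts during an episode
policy.reset()
print(policy.hidden)  # [0.1, 0.2]
policy.set_param_values(([1.0, 1.0], [0.0, 0.3]))
print(policy.get_param_values())  # ([1.0, 1.0], [0.0, 0.3])
```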

-

class

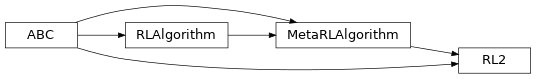

RL2(env_spec, episodes_per_trial, meta_batch_size, task_sampler, meta_evaluator, n_epochs_per_eval, **inner_algo_args)¶ Bases:

garage.np.algos.MetaRLAlgorithm,abc.ABC

RL^2.

Reference: https://arxiv.org/pdf/1611.02779.pdf.

When sampling for RL^2, there is more than one environment to sample from. In the original implementation, all episodes sampled within each task/environment are concatenated into a single episode and fed to the inner algorithm. Thus, returns and advantages are calculated across the concatenated episode, spanning the boundaries between the original episodes.
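The effect of concatenation on returns can be shown with a small example. The helpers `concat_by_task` and `discounted_returns` are hypothetical; episodes are modeled as (task name, reward list) pairs, and discounted reward-to-go stands in for whatever return estimator the inner algorithm actually uses. Only rewards are concatenated here, whereas the real batch would also carry observations and actions.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def concat_by_task(
        episodes: List[Tuple[str, List[float]]]) -> Dict[str, List[float]]:
    """Concatenate the reward sequences of all episodes from the same task."""
    merged = defaultdict(list)
    for task, rewards in episodes:
        merged[task].extend(rewards)
    return dict(merged)


def discounted_returns(rewards: List[float], gamma: float) -> List[float]:
    """Discounted reward-to-go; nothing is cut at old episode boundaries."""
    returns = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


episodes = [('task_a', [0.0, 1.0]), ('task_b', [1.0]),
            ('task_a', [0.0, 2.0])]
merged = concat_by_task(episodes)
print(merged['task_a'])                           # [0.0, 1.0, 0.0, 2.0]
print(discounted_returns(merged['task_a'], 0.5))  # [0.75, 1.5, 1.0, 2.0]
```

The return at index 1, the last step of the first task_a episode, is 1.5 rather than 1.0 because it already includes discounted reward from the second task_a episode.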

RL2Worker is required in sampling for RL2. See example/tf/rl2_ppo_halfcheetah.py for reference.

Users should not instantiate RL2 directly. Currently, garage supports PPO and TRPO as inner algorithms; refer to garage/tf/algos/rl2ppo.py and garage/tf/algos/rl2trpo.py.

- Parameters

env_spec (EnvSpec) – Environment specification.

episodes_per_trial (int) – Used to calculate the max episode length for the inner algorithm.

meta_batch_size (int) – Meta batch size.

task_sampler (TaskSampler) – Task sampler.

meta_evaluator (MetaEvaluator) – Evaluator for meta-RL algorithms.

n_epochs_per_eval (int) – If meta_evaluator is passed, meta-evaluation will be performed every n_epochs_per_eval epochs.

inner_algo_args (dict) – Arguments for inner algorithm.
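Since each inner-algorithm episode is a concatenation of episodes_per_trial episodes, a natural reading is that its length cap is episodes_per_trial times the per-episode cap. The sketch below encodes that reading, plus one reading of the evaluation schedule (evaluate when the epoch index is a multiple of n_epochs_per_eval); both helpers are hypothetical and the exact formulas are assumptions.

```python
def inner_max_episode_length(episodes_per_trial: int,
                             max_episode_length: int) -> int:
    """Assumed cap on one concatenated trial seen by the inner algorithm."""
    return episodes_per_trial * max_episode_length


def should_meta_evaluate(epoch: int, n_epochs_per_eval: int) -> bool:
    """Assumed schedule: evaluate when epoch is a multiple of the period."""
    return epoch % n_epochs_per_eval == 0


print(inner_max_episode_length(2, 200))                       # 400
print([e for e in range(10) if should_meta_evaluate(e, 3)])  # [0, 3, 6, 9]
```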

-

train(self, trainer)

Obtain samplers and start actual training for each epoch.

-

train_once(self, itr, episodes)

Perform one step of policy optimization given one batch of samples.

- Parameters

itr (int) – Iteration number.

episodes (EpisodeBatch) – Batch of episodes.

- Returns

Average return.

- Return type

numpy.float64
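One plausible reading of "average return" is the mean, over episodes, of each episode's undiscounted sum of rewards. The sketch below assumes that definition; `average_return` is a hypothetical helper operating on plain reward lists rather than an EpisodeBatch, and whether the mean is taken over the original episodes or over the concatenated trials is not specified here.

```python
from statistics import mean
from typing import List


def average_return(episode_rewards: List[List[float]]) -> float:
    """Mean over episodes of each episode's undiscounted reward sum."""
    return mean(sum(rewards) for rewards in episode_rewards)


print(average_return([[1.0, 2.0], [0.0, 0.5, 0.5]]))  # 2.0
```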

-

get_exploration_policy(self)

Return a policy used before adaptation to a specific task.

Each time it is retrieved, this policy should only be evaluated in one task.

- Returns

The policy used to obtain samples that are later used for meta-RL adaptation.

- Return type

Policy

-

adapt_policy(self, exploration_policy, exploration_episodes)

Produce a policy adapted for a task.

- Parameters

exploration_policy (Policy) – A policy which was returned from get_exploration_policy(), and which generated exploration_episodes by interacting with an environment. The caller may not use this object after passing it into this method.

exploration_episodes (EpisodeBatch) – episodes to adapt to, generated by exploration_policy exploring the environment.

- Returns

A policy adapted to the task represented by the exploration_episodes.

- Return type

Policy

-

property policy(self)

Policy: Policy to be used.

-

property max_episode_length(self)

int: Maximum length of an episode.