garage.tf.policies.discrete_qf_argmax_policy

A Discrete QFunction-derived policy.

This policy chooses the action that yields the largest Q-value.
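Concretely, take one row of Q-values per observation and one column per discrete action. Choosing the action is then a row-wise argmax. The following is a minimal NumPy sketch that is independent of garage, and the Q-values are made up for illustration:

```python
import numpy as np

# Q-values for 3 observations (rows) and 4 discrete actions (columns).
q_values = np.array([
    [0.1, 0.5, 0.2, 0.0],
    [1.0, -1.0, 0.3, 0.9],
    [0.2, 0.2, 0.1, 0.0],  # tie between actions 0 and 1
])

# Greedy choice: index of the largest Q-value in each row.
# np.argmax returns the first (lowest) index when there is a tie.
actions = np.argmax(q_values, axis=1)
print(actions)  # [1 0 0]
```

In this sketch ties are broken toward the lowest action index, so the selection is deterministic.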

-

class

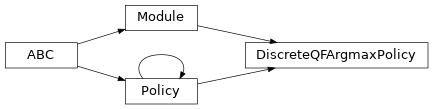

DiscreteQFArgmaxPolicy(env_spec, qf, name='DiscreteQFArgmaxPolicy')¶ Bases:

garage.tf.models.Module,garage.tf.policies.policy.Policy

DiscreteQFArgmax policy.

- Parameters

env_spec (garage.envs.env_spec.EnvSpec) – Environment specification.

qf (garage.q_functions.QFunction) – The Q-function whose values the policy maximizes.

name (str) – Name of the policy.

get_action(self, observation)

Get action from this policy for the input observation.

- Parameters

observation (numpy.ndarray) – Observation from environment.

- Returns

numpy.ndarray: Single optimal action from this policy.

dict: Predicted action and agent information. It is an empty dict because the policy has no parameterization.

- Return type

tuple(numpy.ndarray, dict)

get_actions(self, observations)

Get actions from this policy for the input observations.

- Parameters

observations (numpy.ndarray) – Observations from environment.

- Returns

numpy.ndarray: Optimal actions from this policy, one per observation.

dict: Predicted action and agent information. It is an empty dict because the policy has no parameterization.

- Return type

tuple(numpy.ndarray, dict)
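The return contract of get_action and get_actions is an action array plus an empty agent-info dict. That contract can be sketched without TensorFlow.

This is a hypothetical stand-in, not garage's implementation. ArgmaxPolicySketch and its q_fn callable are assumptions for illustration. q_fn maps a batch of observations to an array of shape (batch, n_actions).

```python
import numpy as np


class ArgmaxPolicySketch:
    """Hypothetical greedy policy over a Q-value callable (not garage code)."""

    def __init__(self, q_fn):
        # Assumed: q_fn maps observations of shape (N, obs_dim)
        # to Q-values of shape (N, n_actions).
        self._q_fn = q_fn

    def get_actions(self, observations):
        q_vals = self._q_fn(np.asarray(observations))
        # One greedy action per observation, plus empty agent info.
        return np.argmax(q_vals, axis=1), dict()

    def get_action(self, observation):
        # Treat a single observation as a batch of one.
        actions, agent_info = self.get_actions([observation])
        return actions[0], agent_info


# Toy linear Q-function with obs_dim=2 and n_actions=3.
weights = np.array([[1.0, 0.0, -1.0],
                    [0.0, 1.0, 0.5]])
policy = ArgmaxPolicySketch(lambda obs: obs @ weights)

action, info = policy.get_action(np.array([0.0, 2.0]))  # Q = [0, 2, 1]
actions, infos = policy.get_actions(np.array([[3.0, 0.0], [0.0, 2.0]]))
```

Routing get_action through get_actions keeps a single code path for the argmax. That is a design choice of this sketch and may not match garage's internals.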

get_trainable_vars(self)

Get trainable variables.

- Returns

A list of trainable variables in the current variable scope.

- Return type

List[tf.Variable]

get_global_vars(self)

Get global variables.

- Returns

A list of global variables in the current variable scope.

- Return type

List[tf.Variable]

get_regularizable_vars(self)

Get all network weight variables in the current scope.

- Returns

A list of network weight variables in the current variable scope.

- Return type

List[tf.Variable]

get_params(self)

Get the trainable variables.

- Returns

A list of trainable variables in the current variable scope.

- Return type

List[tf.Variable]

get_param_shapes(self)

Get parameter shapes.

- Returns

A list of variable shapes.

- Return type

List[tuple]

get_param_values(self)

Get parameter values.

- Returns

Values of the parameters evaluated in the current session.

- Return type

np.ndarray

set_param_values(self, param_values)

Set parameter values.

- Parameters

param_values (np.ndarray) – A numpy array of parameter values.

property env_spec

Policy environment specification.

- Returns

Environment specification.

- Return type

garage.EnvSpec

property name

str: Name of this module.

reset(self, do_resets=None)

Reset the module.

This only has an effect on recurrent modules, and do_resets only has an effect on vectorized modules.

For vectorized modules, do_resets is a boolean array indicating which internal states should be reset. Its length should equal the number of inputs.

- Parameters

do_resets (numpy.ndarray) – Boolean array indicating which states should be reset.
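An argmax policy over a feed-forward Q-function has no internal state to clear. For a recurrent, vectorized module, however, the do_resets semantics above amount to a masked overwrite of per-input hidden states.

The following is a minimal NumPy sketch, not garage code. The helper masked_reset, the hidden-state layout and the rule that None resets every row are all assumptions for illustration.

```python
import numpy as np


def masked_reset(hidden, init_hidden, do_resets=None):
    """Reset selected rows of a batched hidden state (hypothetical helper).

    hidden: array of shape (n_inputs, hidden_dim), one row per input.
    init_hidden: array of shape (hidden_dim,), the initial state.
    do_resets: bool array of shape (n_inputs,).
    """
    n_inputs = hidden.shape[0]
    if do_resets is None:
        # Assumption for this sketch: no mask means reset every row.
        do_resets = np.ones(n_inputs, dtype=bool)
    do_resets = np.asarray(do_resets, dtype=bool)
    # The mask must have exactly one entry per input.
    if do_resets.shape != (n_inputs,):
        raise ValueError("do_resets must have one entry per input")
    new_hidden = hidden.copy()  # leave the caller's array untouched
    new_hidden[do_resets] = init_hidden  # broadcast into the masked rows
    return new_hidden


hidden = np.array([[1.0, 1.0], [2.0, 2.0], [3.0, 3.0]])
new_hidden = masked_reset(hidden, np.zeros(2), [True, False, True])
```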

property state_info_specs

State info specification.

- Returns

Keys and shapes for the information related to the module’s state when taking an action.

- Return type

List[str]

property state_info_keys

State info keys.

- Returns

Keys for the information related to the module’s state when taking an input.

- Return type

List[str]

terminate(self)

Clean up operation.

flat_to_params(self, flattened_params)

Unflatten tensors according to their respective shapes.

- Parameters

flattened_params (np.ndarray) – A numpy array of flattened params.

- Returns

A list of parameters reshaped to the specified shapes.

- Return type

List[np.ndarray]
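Unflattening is the inverse of concatenating raveled arrays. You split the flat vector at the cumulative sizes of the target shapes, then reshape each piece. The following NumPy sketch shows that idea. unflatten_by_shapes is a hypothetical helper, not garage's implementation, and the example parameters are made up.

```python
import numpy as np


def unflatten_by_shapes(flat, shapes):
    """Split a 1-D array into arrays with the given shapes (hypothetical)."""
    sizes = [int(np.prod(shape)) for shape in shapes]
    if sum(sizes) != flat.size:
        raise ValueError("flat array size does not match the shapes")
    # Split points are the running totals, excluding the final total.
    pieces = np.split(flat, np.cumsum(sizes)[:-1])
    return [piece.reshape(shape) for piece, shape in zip(pieces, shapes)]


# Round trip: flatten a weight matrix and a bias vector, then restore them.
params = [np.arange(6.0).reshape(2, 3), np.array([10.0, 20.0, 30.0])]
shapes = [p.shape for p in params]
flat = np.concatenate([p.ravel() for p in params])
restored = unflatten_by_shapes(flat, shapes)
```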

property observation_space

Observation space.

- Returns

The observation space of the environment.

- Return type

akro.Space

property action_space

Action space.

- Returns

The action space of the environment.

- Return type

akro.Space