garage.torch.policies

PyTorch Policies.

-

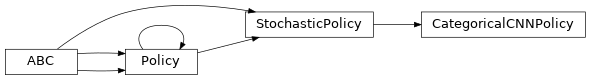

class CategoricalCNNPolicy(env, kernel_sizes, hidden_channels, strides=1, hidden_sizes=(32, 32), hidden_nonlinearity=torch.tanh, hidden_w_init=nn.init.xavier_uniform_, hidden_b_init=nn.init.zeros_, paddings=0, padding_mode='zeros', max_pool=False, pool_shape=None, pool_stride=1, output_nonlinearity=None, output_w_init=nn.init.xavier_uniform_, output_b_init=nn.init.zeros_, layer_normalization=False, name='CategoricalCNNPolicy')

Bases: garage.torch.policies.stochastic_policy.StochasticPolicy

CategoricalCNNPolicy.

A policy that contains a CNN and an MLP to make predictions based on a categorical distribution.

It only works with an akro.Discrete action space.
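As a rough, framework-free illustration of what "predictions based on a categorical distribution" means for a discrete action space, the sketch below turns unnormalized scores into probabilities and samples an action index. The helper names `softmax` and `sample_discrete_action` are hypothetical, and treating the network output as logits is an assumption rather than something this page states.

```python
import math
import random


def softmax(logits):
    """Convert unnormalized scores into categorical probabilities."""
    # Subtracting the max keeps exp() numerically stable without changing the result.
    shift = max(logits)
    exps = [math.exp(x - shift) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sample_discrete_action(logits, rng=random):
    """Draw one action index from the categorical distribution (hypothetical helper)."""
    probs = softmax(logits)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


# Three discrete actions; the first one has the highest score.
probs = softmax([2.0, 0.0, 0.0])
action = sample_discrete_action([2.0, 0.0, 0.0])
```

The sampled `action` is an integer index, which is the kind of value a discrete action space expects.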

- Parameters

env (garage.envs) – Environment.

kernel_sizes (tuple[int]) – Dimension of the conv filters. For example, (3, 5) means there are two convolutional layers. The filter for first layer is of dimension (3 x 3) and the second one is of dimension (5 x 5).

strides (tuple[int]) – The stride of the sliding window. For example, (1, 2) means there are two convolutional layers. The stride of the filter for first layer is 1 and that of the second layer is 2.

hidden_channels (tuple[int]) – Number of output channels for CNN. For example, (3, 32) means there are two convolutional layers. The filter for the first conv layer outputs 3 channels and the second one outputs 32 channels.

hidden_sizes (list[int]) – Output dimension of dense layer(s) for the MLP for mean. For example, (32, 32) means the MLP consists of two hidden layers, each with 32 hidden units.

hidden_nonlinearity (callable) – Activation function for intermediate dense layer(s). It should return a torch.Tensor. Set it to None to maintain a linear activation.

hidden_w_init (callable) – Initializer function for the weight of intermediate dense layer(s). The function should return a torch.Tensor.

hidden_b_init (callable) – Initializer function for the bias of intermediate dense layer(s). The function should return a torch.Tensor.

paddings (tuple[int]) – Zero-padding added to both sides of the input.

padding_mode (str) – The type of padding algorithm to use, either ‘SAME’ or ‘VALID’.

max_pool (bool) – Bool for using max-pooling or not.

pool_shape (tuple[int]) – Dimension of the pooling layer(s). For example, (2, 2) means that all the pooling layers have shape (2, 2).

pool_stride (tuple[int]) – The strides of the pooling layer(s). For example, (2, 2) means that all the pooling layers have strides (2, 2).

output_nonlinearity (callable) – Activation function for output dense layer. It should return a torch.Tensor. Set it to None to maintain a linear activation.

output_w_init (callable) – Initializer function for the weight of output dense layer(s). The function should return a torch.Tensor.

output_b_init (callable) – Initializer function for the bias of output dense layer(s). The function should return a torch.Tensor.

layer_normalization (bool) – Bool for using layer normalization or not.

name (str) – Name of policy.
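To make the interaction of kernel_sizes, strides, and paddings concrete, here is a minimal sketch that computes the spatial size after each convolutional layer using the standard formula for a convolution without dilation. The function `conv_output_sizes` is hypothetical and is not part of garage; it also assumes square inputs and per-layer tuples for every argument.

```python
def conv_output_sizes(input_size, kernel_sizes, strides, paddings):
    """Return the spatial size after each convolutional layer (hypothetical helper)."""
    sizes = []
    size = input_size
    for kernel, stride, padding in zip(kernel_sizes, strides, paddings):
        # Standard output-size formula for a convolution without dilation.
        size = (size + 2 * padding - kernel) // stride + 1
        sizes.append(size)
    return sizes


# A 32x32 input through kernel_sizes=(3, 5), strides=(1, 2), no padding.
sizes = conv_output_sizes(32, kernel_sizes=(3, 5), strides=(1, 2), paddings=(0, 0))
print(sizes)  # [30, 13]
```

Checking these sizes by hand is a quick way to confirm that a chosen kernel and stride combination does not shrink the feature map to zero before the MLP.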

-

forward(self, observations)¶ Compute the action distributions from the observations.

- Parameters

observations (torch.Tensor) – Batch of observations on default torch device.

- Returns

- Batch distribution of actions.

- dict[str, torch.Tensor]: Additional agent_info, as torch Tensors. These do not need to be detached, and can be on any device.

- Return type

torch.distributions.Distribution

-

get_action(self, observation)¶ Get a single action given an observation.

- Parameters

observation (np.ndarray) – Observation from the environment. Shape is \(env_spec.observation_space\).

- Returns

- np.ndarray: Predicted action. Shape is \(env_spec.action_space\).

- dict:

  - np.ndarray[float]: Mean of the distribution.

  - np.ndarray[float]: Standard deviation of logarithmic values of the distribution.

- Return type

-

get_actions(self, observations)¶ Get actions given observations.

- Parameters

observations (np.ndarray) – Observations from the environment. Shape is \(batch_dim \bullet env_spec.observation_space\).

- Returns

- np.ndarray: Predicted actions. Shape is \(batch_dim \bullet env_spec.action_space\).

- dict:

  - np.ndarray[float]: Mean of the distribution.

  - np.ndarray[float]: Standard deviation of logarithmic values of the distribution.

- Return type

-

get_param_values(self)¶ Get the parameters to the policy.

This method is included to ensure consistency with TF policies.

- Returns

The parameters (in the form of the state dictionary).

- Return type

-

set_param_values(self, state_dict)¶ Set the parameters to the policy.

This method is included to ensure consistency with TF policies.

- Parameters

state_dict (dict) – State dictionary.

-

reset(self, do_resets=None)¶ Reset the policy.

This only affects recurrent policies.

do_resets is a boolean array indicating which internal states should be reset. The length of do_resets should equal the length of the inputs, i.e. the batch size.

- Parameters

do_resets (numpy.ndarray) – Boolean array indicating which states should be reset.

-

property

env_spec(self)¶ Policy environment specification.

- Returns

Environment specification.

- Return type

garage.EnvSpec

-

property

observation_space(self)¶ Observation space.

- Returns

The observation space of the environment.

- Return type

akro.Space

-

property

action_space(self)¶ Action space.

- Returns

The action space of the environment.

- Return type

akro.Space

-

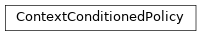

class ContextConditionedPolicy(latent_dim, context_encoder, policy, use_information_bottleneck, use_next_obs)

Bases: torch.nn.Module

A policy that outputs actions based on observation and latent context.

In PEARL, policies are conditioned on the current state and a latent context (adaptation data) variable z. This inference network estimates the posterior probability of z given past transitions. It uses context information stored in the encoder to infer the probabilistic value of z and samples from a policy conditioned on z.

- Parameters

latent_dim (int) – Latent context variable dimension.

context_encoder (garage.torch.embeddings.ContextEncoder) – Recurrent or permutation-invariant context encoder.

policy (garage.torch.policies.Policy) – Policy used to train the network.

use_information_bottleneck (bool) – True if latent context is not deterministic; false otherwise.

use_next_obs (bool) – True if next observation is used in context for distinguishing tasks; false otherwise.

-

reset_belief(self, num_tasks=1)¶ Reset \(q(z | c)\) to the prior and sample a new z from the prior.

- Parameters

num_tasks (int) – Number of tasks.

-

sample_from_belief(self)¶ Sample z using distributions from current means and variances.
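Sampling z from per-dimension means and variances can be sketched without any framework as below. The function `sample_z` is hypothetical, and the assumption that the belief is a diagonal Gaussian sampled as mean plus scaled noise is an illustration, not a statement about how garage implements this method.

```python
import math
import random


def sample_z(means, variances, rng=random):
    """Sample z = mean + sqrt(variance) * eps with eps ~ N(0, 1) (hypothetical helper)."""
    return [m + math.sqrt(v) * rng.gauss(0.0, 1.0)
            for m, v in zip(means, variances)]


# Assumed prior for illustration: zero mean and unit variance, latent_dim = 3.
z = sample_z([0.0, 0.0, 0.0], [1.0, 1.0, 1.0])

# With zero variance the sample collapses onto the mean.
z_deterministic = sample_z([1.5, -2.0], [0.0, 0.0])
```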

-

update_context(self, timestep)¶ Append single transition to the current context.

- Parameters

timestep (garage._dtypes.TimeStep) – Timestep containing transition information to be added to context.

-

infer_posterior(self, context)¶ Compute \(q(z | c)\) as a function of input context and sample new z.

- Parameters

context (torch.Tensor) – Context values, with shape \((X, N, C)\). X is the number of tasks. N is batch size. C is the combined size of observation, action, reward, and next observation if next observation is used in context. Otherwise, C is the combined size of observation, action, and reward.
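The size C of the last context dimension follows directly from the description above. A small sketch, assuming a scalar reward (reward_dim=1 is an assumption, as is the helper name `context_dim`):

```python
def context_dim(obs_dim, action_dim, use_next_obs, reward_dim=1):
    """Combined size C of one context entry (hypothetical helper).

    reward_dim=1 assumes a scalar reward; this page does not state the reward size.
    """
    dim = obs_dim + action_dim + reward_dim
    if use_next_obs:
        # The next observation has the same flattened size as the observation.
        dim += obs_dim
    return dim


# Flattened observation of size 4 and action of size 2.
c_without_next_obs = context_dim(4, 2, use_next_obs=False)
c_with_next_obs = context_dim(4, 2, use_next_obs=True)
```

A context tensor for X tasks and batch size N would then have shape (X, N, C) with C computed this way.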

-

forward(self, obs, context)¶ Given observations and context, get actions and probs from policy.

- Parameters

obs (torch.Tensor) – Observation values, with shape \((X, N, O)\). X is the number of tasks. N is batch size. O is the size of the flattened observation space.

context (torch.Tensor) – Context values, with shape \((X, N, C)\). X is the number of tasks. N is batch size. C is the combined size of observation, action, reward, and next observation if next observation is used in context. Otherwise, C is the combined size of observation, action, and reward.

- Returns

- torch.Tensor: Predicted action values.

- np.ndarray: Mean of distribution.

- np.ndarray: Log std of distribution.

- torch.Tensor: Log likelihood of distribution.

- torch.Tensor: Sampled values from distribution before applying tanh transformation.

- torch.Tensor: z values, with shape \((N, L)\). N is batch size. L is the latent dimension.

- Return type

-

get_action(self, obs)¶ Sample action from the policy, conditioned on the task embedding.

- Parameters

obs (torch.Tensor) – Observation values, with shape \((1, O)\). O is the size of the flattened observation space.

- Returns

- Output action value, with shape \((1, A)\). A is the size of the flattened action space.

- dict:

  - np.ndarray[float]: Mean of the distribution.

  - np.ndarray[float]: Standard deviation of logarithmic values of the distribution.

- Return type

torch.Tensor

-

compute_kl_div(self)¶ Compute \(KL(q(z|c) \| p(z))\).

- Returns

\(KL(q(z|c) \| p(z))\).

- Return type
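For intuition about \(KL(q(z|c) \| p(z))\), the sketch below evaluates the closed form for a diagonal Gaussian posterior against a unit Gaussian prior. Both the unit Gaussian prior and the diagonal posterior are assumptions made for illustration; the helper `kl_to_unit_gaussian` is hypothetical and this page does not confirm which distributions garage uses.

```python
import math


def kl_to_unit_gaussian(means, variances):
    """KL(N(mean, diag(var)) || N(0, I)) in closed form (hypothetical helper)."""
    # Per dimension: 0.5 * (var + mean^2 - 1 - log(var)).
    return 0.5 * sum(v + m * m - 1.0 - math.log(v)
                     for m, v in zip(means, variances))


# The divergence is zero when the posterior equals the assumed prior.
kl_at_prior = kl_to_unit_gaussian([0.0, 0.0], [1.0, 1.0])

# Shifting the mean by 1 in one dimension costs 0.5 nats.
kl_shifted = kl_to_unit_gaussian([1.0], [1.0])
```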

-

property

networks(self)¶ Return context_encoder and policy.

- Returns

Encoder and policy networks.

- Return type

-

property

context(self)¶ Return context.

- Returns

Context values, with shape \((X, N, C)\). X is the number of tasks. N is batch size. C is the combined size of observation, action, reward, and next observation if next observation is used in context. Otherwise, C is the combined size of observation, action, and reward.

- Return type

torch.Tensor

-

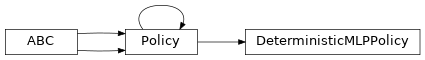

class DeterministicMLPPolicy(env_spec, name='DeterministicMLPPolicy', **kwargs)

Bases: garage.torch.policies.policy.Policy

Implements a deterministic policy network.

The policy network selects an action based on the state of the environment. It uses a PyTorch neural network module to fit the function pi(s).

-

forward(self, observations)¶ Compute actions from the observations.

- Parameters

observations (torch.Tensor) – Batch of observations on default torch device.

- Returns

Batch of actions.

- Return type

torch.Tensor

-

get_action(self, observation)¶ Get a single action given an observation.

- Parameters

observation (np.ndarray) – Observation from the environment.

- Returns

- np.ndarray: Predicted action.

- dict:

  - np.ndarray[float]: Mean of the distribution.

  - np.ndarray[float]: Log of standard deviation of the distribution.

- Return type

-

get_actions(self, observations)¶ Get actions given observations.

- Parameters

observations (np.ndarray) – Observations from the environment.

- Returns

- np.ndarray: Predicted actions.

- dict:

  - np.ndarray[float]: Mean of the distribution.

  - np.ndarray[float]: Log of standard deviation of the distribution.

- Return type

-

get_param_values(self)¶ Get the parameters to the policy.

This method is included to ensure consistency with TF policies.

- Returns

The parameters (in the form of the state dictionary).

- Return type

-

set_param_values(self, state_dict)¶ Set the parameters to the policy.

This method is included to ensure consistency with TF policies.

- Parameters

state_dict (dict) – State dictionary.

-

reset(self, do_resets=None)¶ Reset the policy.

This only affects recurrent policies.

do_resets is a boolean array indicating which internal states should be reset. The length of do_resets should equal the length of the inputs, i.e. the batch size.

- Parameters

do_resets (numpy.ndarray) – Boolean array indicating which states should be reset.

-

property

env_spec(self)¶ Policy environment specification.

- Returns

Environment specification.

- Return type

garage.EnvSpec

-

property

observation_space(self)¶ Observation space.

- Returns

The observation space of the environment.

- Return type

akro.Space

-

property

action_space(self)¶ Action space.

- Returns

The action space of the environment.

- Return type

akro.Space

-

-

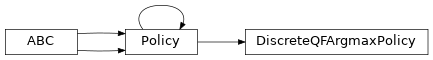

class DiscreteQFArgmaxPolicy(qf, env_spec, name='DiscreteQFArgmaxPolicy')

Bases: garage.torch.policies.policy.Policy

Policy that derives its actions from a learned Q function.

The action returned is the one that yields the highest Q value for a given state, as determined by the supplied Q function.
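The selection rule described above amounts to an argmax over Q values. A minimal framework-free sketch, with hypothetical helper names and Q values given as plain lists rather than tensors:

```python
def argmax_action(q_values):
    """Index of the action with the highest Q value (ties go to the lowest index)."""
    return max(range(len(q_values)), key=lambda a: q_values[a])


def argmax_actions(q_batch):
    """Apply the argmax rule to each row of a batch of Q values."""
    return [argmax_action(q_values) for q_values in q_batch]


# One state with three candidate actions.
best = argmax_action([0.1, 0.9, 0.3])

# A batch of two states with two actions each.
best_batch = argmax_actions([[1.0, 0.0], [0.0, 2.0]])
```

Because the choice is deterministic given the Q function, there is no distribution to report alongside the action.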

- Parameters

-

forward(self, observations)¶ Get actions corresponding to a batch of observations.

- Parameters

observations (torch.Tensor) – Batch of observations of shape \((N, O)\). Observations should be flattened even if they are images as the underlying Q network handles unflattening.

- Returns

Batch of actions of shape \((N, A)\)

- Return type

torch.Tensor

-

get_action(self, observation)¶ Get a single action given an observation.

- Parameters

observation (np.ndarray) – Observation with shape \((O, )\).

- Returns

- Predicted action with shape \((A, )\).

- dict: Empty since this policy does not produce a distribution.

- Return type

torch.Tensor

-

get_actions(self, observations)¶ Get actions given observations.

- Parameters

observations (np.ndarray) – Batch of observations, should have shape \((N, O)\).

- Returns

- Predicted actions. Tensor has shape \((N, A)\).

- dict: Empty since this policy does not produce a distribution.

- Return type

torch.Tensor

-

get_param_values(self)¶ Get the parameters to the policy.

This method is included to ensure consistency with TF policies.

- Returns

The parameters (in the form of the state dictionary).

- Return type

-

set_param_values(self, state_dict)¶ Set the parameters to the policy.

This method is included to ensure consistency with TF policies.

- Parameters

state_dict (dict) – State dictionary.

-

reset(self, do_resets=None)¶ Reset the policy.

This only affects recurrent policies.

do_resets is a boolean array indicating which internal states should be reset. The length of do_resets should equal the length of the inputs, i.e. the batch size.

- Parameters

do_resets (numpy.ndarray) – Boolean array indicating which states should be reset.

-

property

env_spec(self)¶ Policy environment specification.

- Returns

Environment specification.

- Return type

garage.EnvSpec

-

property

observation_space(self)¶ Observation space.

- Returns

The observation space of the environment.

- Return type

akro.Space

-

property

action_space(self)¶ Action space.

- Returns

The action space of the environment.

- Return type

akro.Space

-

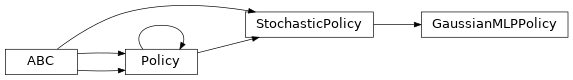

class GaussianMLPPolicy(env_spec, hidden_sizes=(32, 32), hidden_nonlinearity=torch.tanh, hidden_w_init=nn.init.xavier_uniform_, hidden_b_init=nn.init.zeros_, output_nonlinearity=None, output_w_init=nn.init.xavier_uniform_, output_b_init=nn.init.zeros_, learn_std=True, init_std=1.0, min_std=1e-06, max_std=None, std_parameterization='exp', layer_normalization=False, name='GaussianMLPPolicy')

Bases: garage.torch.policies.stochastic_policy.StochasticPolicy

MLP whose outputs are fed into a Normal distribution.

A policy that contains an MLP to make predictions based on a Gaussian distribution.
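To see how a hidden_sizes value such as (32, 32) translates into dense layers, the sketch below lists the (input, output) dimensions of each layer of the mean MLP. The helper `mlp_layer_shapes` is hypothetical, and the assumption that the first layer takes the flattened observation and the last layer emits one value per action dimension is made for illustration only.

```python
def mlp_layer_shapes(obs_dim, hidden_sizes, action_dim):
    """List (in_features, out_features) for each dense layer (hypothetical helper)."""
    dims = [obs_dim, *hidden_sizes, action_dim]
    # Pair each dimension with the next one to get per-layer shapes.
    return list(zip(dims[:-1], dims[1:]))


# Flattened observation of size 4, default hidden_sizes, action of size 2.
shapes = mlp_layer_shapes(4, (32, 32), 2)
print(shapes)  # [(4, 32), (32, 32), (32, 2)]
```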

- Parameters

env_spec (EnvSpec) – Environment specification.

hidden_sizes (list[int]) – Output dimension of dense layer(s) for the MLP for mean. For example, (32, 32) means the MLP consists of two hidden layers, each with 32 hidden units.

hidden_nonlinearity (callable) – Activation function for intermediate dense layer(s). It should return a torch.Tensor. Set it to None to maintain a linear activation.

hidden_w_init (callable) – Initializer function for the weight of intermediate dense layer(s). The function should return a torch.Tensor.

hidden_b_init (callable) – Initializer function for the bias of intermediate dense layer(s). The function should return a torch.Tensor.

output_nonlinearity (callable) – Activation function for output dense layer. It should return a torch.Tensor. Set it to None to maintain a linear activation.

output_w_init (callable) – Initializer function for the weight of output dense layer(s). The function should return a torch.Tensor.

output_b_init (callable) – Initializer function for the bias of output dense layer(s). The function should return a torch.Tensor.

learn_std (bool) – Whether the std is trainable.

init_std (float) – Initial value for std. (plain value - not log or exponentiated).

min_std (float) – Minimum value for std.

max_std (float) – Maximum value for std.

std_parameterization (str) – How the std should be parametrized. There are two options:

- exp: the logarithm of the std will be stored, and an exponential transformation applied.

- softplus: the std will be computed as log(1+exp(x)).

layer_normalization (bool) – Bool for using layer normalization or not.

name (str) – Name of policy.
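The two std_parameterization options, together with init_std, min_std, and max_std, can be illustrated with plain floats. This is a sketch under stated assumptions: the helper names are hypothetical, and clamping the resulting std (rather than the stored parameter) is an assumption about where the bounds apply.

```python
import math


def std_from_param(x, std_parameterization="exp", min_std=1e-6, max_std=None):
    """Map a stored parameter x to a standard deviation (hypothetical helper)."""
    if std_parameterization == "exp":
        std = math.exp(x)  # x stores log(std)
    elif std_parameterization == "softplus":
        std = math.log1p(math.exp(x))  # log(1 + exp(x))
    else:
        raise ValueError(f"unknown std_parameterization: {std_parameterization}")
    # Assumption: bounds are applied to the plain std value.
    if min_std is not None:
        std = max(std, min_std)
    if max_std is not None:
        std = min(std, max_std)
    return std


def param_from_init_std(init_std, std_parameterization="exp"):
    """Invert the mapping so that the initial std equals init_std."""
    if std_parameterization == "exp":
        return math.log(init_std)
    if std_parameterization == "softplus":
        return math.log(math.expm1(init_std))  # inverse of softplus
    raise ValueError(f"unknown std_parameterization: {std_parameterization}")


exp_std = std_from_param(param_from_init_std(1.0, "exp"), "exp")
softplus_std = std_from_param(param_from_init_std(1.0, "softplus"), "softplus")
```

Both parameterizations keep the std positive for any real-valued parameter, which is why the stored value can be optimized without constraints.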

-

forward(self, observations)¶ Compute the action distributions from the observations.

- Parameters

observations (torch.Tensor) – Batch of observations on default torch device.

- Returns

- Batch distribution of actions.

- dict[str, torch.Tensor]: Additional agent_info, as torch Tensors.

- Return type

torch.distributions.Distribution

-

get_action(self, observation)¶ Get a single action given an observation.

- Parameters

observation (np.ndarray) – Observation from the environment. Shape is \(env_spec.observation_space\).

- Returns

- np.ndarray: Predicted action. Shape is \(env_spec.action_space\).

- dict:

  - np.ndarray[float]: Mean of the distribution.

  - np.ndarray[float]: Standard deviation of logarithmic values of the distribution.

- Return type

-

get_actions(self, observations)¶ Get actions given observations.

- Parameters

observations (np.ndarray) – Observations from the environment. Shape is \(batch_dim \bullet env_spec.observation_space\).

- Returns

- np.ndarray: Predicted actions. Shape is \(batch_dim \bullet env_spec.action_space\).

- dict:

  - np.ndarray[float]: Mean of the distribution.

  - np.ndarray[float]: Standard deviation of logarithmic values of the distribution.

- Return type

-

get_param_values(self)¶ Get the parameters to the policy.

This method is included to ensure consistency with TF policies.

- Returns

The parameters (in the form of the state dictionary).

- Return type

-

set_param_values(self, state_dict)¶ Set the parameters to the policy.

This method is included to ensure consistency with TF policies.

- Parameters

state_dict (dict) – State dictionary.

-

reset(self, do_resets=None)¶ Reset the policy.

This only affects recurrent policies.

do_resets is a boolean array indicating which internal states should be reset. The length of do_resets should equal the length of the inputs, i.e. the batch size.

- Parameters

do_resets (numpy.ndarray) – Boolean array indicating which states should be reset.

-

property

env_spec(self)¶ Policy environment specification.

- Returns

Environment specification.

- Return type

garage.EnvSpec

-

property

observation_space(self)¶ Observation space.

- Returns

The observation space of the environment.

- Return type

akro.Space

-

property

action_space(self)¶ Action space.

- Returns

The action space of the environment.

- Return type

akro.Space

-

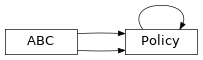

class Policy(env_spec, name)

Bases: torch.nn.Module, garage.np.policies.Policy, abc.ABC

Policy base class.

-

abstract

get_action(self, observation)¶ Get action sampled from the policy.

-

abstract

get_actions(self, observations)¶ Get actions given observations.

-

get_param_values(self)¶ Get the parameters to the policy.

This method is included to ensure consistency with TF policies.

- Returns

The parameters (in the form of the state dictionary).

- Return type

-

set_param_values(self, state_dict)¶ Set the parameters to the policy.

This method is included to ensure consistency with TF policies.

- Parameters

state_dict (dict) – State dictionary.

-

reset(self, do_resets=None)¶ Reset the policy.

This only affects recurrent policies.

do_resets is a boolean array indicating which internal states should be reset. The length of do_resets should equal the length of the inputs, i.e. the batch size.

- Parameters

do_resets (numpy.ndarray) – Boolean array indicating which states should be reset.

-

property

env_spec(self)¶ Policy environment specification.

- Returns

Environment specification.

- Return type

garage.EnvSpec

-

property

observation_space(self)¶ Observation space.

- Returns

The observation space of the environment.

- Return type

akro.Space

-

property

action_space(self)¶ Action space.

- Returns

The action space of the environment.

- Return type

akro.Space

-

abstract

-

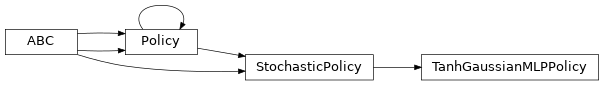

class TanhGaussianMLPPolicy(env_spec, hidden_sizes=(32, 32), hidden_nonlinearity=nn.ReLU, hidden_w_init=nn.init.xavier_uniform_, hidden_b_init=nn.init.zeros_, output_nonlinearity=None, output_w_init=nn.init.xavier_uniform_, output_b_init=nn.init.zeros_, init_std=1.0, min_std=np.exp(-20.0), max_std=np.exp(2.0), std_parameterization='exp', layer_normalization=False)

Bases: garage.torch.policies.stochastic_policy.StochasticPolicy

Multiheaded MLP whose outputs are fed into a TanhNormal distribution.

A policy that contains an MLP to make predictions based on a Gaussian distribution with a tanh transformation.

- Parameters

env_spec (EnvSpec) – Environment specification.

hidden_sizes (list[int]) – Output dimension of dense layer(s) for the MLP for mean. For example, (32, 32) means the MLP consists of two hidden layers, each with 32 hidden units.

hidden_nonlinearity (callable) – Activation function for intermediate dense layer(s). It should return a torch.Tensor. Set it to None to maintain a linear activation.

hidden_w_init (callable) – Initializer function for the weight of intermediate dense layer(s). The function should return a torch.Tensor.

hidden_b_init (callable) – Initializer function for the bias of intermediate dense layer(s). The function should return a torch.Tensor.

output_nonlinearity (callable) – Activation function for output dense layer. It should return a torch.Tensor. Set it to None to maintain a linear activation.

output_w_init (callable) – Initializer function for the weight of output dense layer(s). The function should return a torch.Tensor.

output_b_init (callable) – Initializer function for the bias of output dense layer(s). The function should return a torch.Tensor.

init_std (float) – Initial value for std. (plain value - not log or exponentiated).

min_std (float) – If not None, the std is at least the value of min_std, to avoid numerical issues (plain value - not log or exponentiated).

max_std (float) – If not None, the std is at most the value of max_std, to avoid numerical issues (plain value - not log or exponentiated).

std_parameterization (str) – How the std should be parametrized. There are two options:

- exp: the logarithm of the std will be stored, and an exponential transformation applied.

- softplus: the std will be computed as log(1+exp(x)).

layer_normalization (bool) – Bool for using layer normalization or not.
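A Gaussian sample passed through tanh lands in (-1, 1), and its log-likelihood picks up a change-of-variables correction. The sketch below shows that standard calculation with plain floats. The helper names and the `eps` stabilizer are assumptions for illustration; this is not presented as garage's TanhNormal implementation.

```python
import math


def normal_log_prob(u, mean, std):
    """Log density of N(mean, std^2) evaluated at u."""
    var = std * std
    return -0.5 * math.log(2.0 * math.pi * var) - (u - mean) ** 2 / (2.0 * var)


def tanh_normal_log_prob(pre_tanh, means, stds, eps=1e-6):
    """Log-likelihood of a = tanh(u) for independent dimensions (hypothetical helper).

    eps is an assumed stabilizer that keeps log() finite when tanh saturates.
    """
    log_prob = 0.0
    for u, m, s in zip(pre_tanh, means, stds):
        a = math.tanh(u)
        # Change of variables: subtract log|d tanh(u) / du| = log(1 - tanh(u)^2).
        log_prob += normal_log_prob(u, m, s) - math.log(1.0 - a * a + eps)
    return log_prob


# At u = 0 the tanh correction vanishes (up to eps).
lp_center = tanh_normal_log_prob([0.0], [0.0], [1.0])

# A saturated sample still yields a finite value thanks to eps.
lp_saturated = tanh_normal_log_prob([20.0], [0.0], [1.0])
```

Keeping the pre-tanh sample around avoids having to invert tanh when the log-likelihood is needed later.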

-

forward(self, observations)¶ Compute the action distributions from the observations.

- Parameters

observations (torch.Tensor) – Batch of observations on default torch device.

- Returns

- Batch distribution of actions.

- dict[str, torch.Tensor]: Additional agent_info, as torch Tensors.

- Return type

torch.distributions.Distribution

-

get_action(self, observation)¶ Get a single action given an observation.

- Parameters

observation (np.ndarray) – Observation from the environment. Shape is \(env_spec.observation_space\).

- Returns

- np.ndarray: Predicted action. Shape is \(env_spec.action_space\).

- dict:

  - np.ndarray[float]: Mean of the distribution.

  - np.ndarray[float]: Standard deviation of logarithmic values of the distribution.

- Return type

-

get_actions(self, observations)¶ Get actions given observations.

- Parameters

observations (np.ndarray) – Observations from the environment. Shape is \(batch_dim \bullet env_spec.observation_space\).

- Returns

- np.ndarray: Predicted actions. Shape is \(batch_dim \bullet env_spec.action_space\).

- dict:

  - np.ndarray[float]: Mean of the distribution.

  - np.ndarray[float]: Standard deviation of logarithmic values of the distribution.

- Return type

-

get_param_values(self)¶ Get the parameters to the policy.

This method is included to ensure consistency with TF policies.

- Returns

The parameters (in the form of the state dictionary).

- Return type

-

set_param_values(self, state_dict)¶ Set the parameters to the policy.

This method is included to ensure consistency with TF policies.

- Parameters

state_dict (dict) – State dictionary.

-

reset(self, do_resets=None)¶ Reset the policy.

This only affects recurrent policies.

do_resets is a boolean array indicating which internal states should be reset. The length of do_resets should equal the length of the inputs, i.e. the batch size.

- Parameters

do_resets (numpy.ndarray) – Boolean array indicating which states should be reset.

-

property

env_spec(self)¶ Policy environment specification.

- Returns

Environment specification.

- Return type

garage.EnvSpec

-

property

observation_space(self)¶ Observation space.

- Returns

The observation space of the environment.

- Return type

akro.Space

-

property

action_space(self)¶ Action space.

- Returns

The action space of the environment.

- Return type

akro.Space