garage.torch

PyTorch-backed modules and algorithms.

-

compute_advantages(discount, gae_lambda, max_episode_length, baselines, rewards)

Calculate advantages.

Advantages are a discounted cumulative sum.

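Calculate advantages using a baseline according to Generalized Advantage Estimation (GAE).

The discounted cumulative sum can be computed using conv2d with the filter

\[ [1,\ \gamma\lambda,\ (\gamma\lambda)^2,\ \ldots,\ (\gamma\lambda)^{T-1}] \]

where \(\gamma\) is discount, \(\lambda\) is gae_lambda, and the filter length \(T\) equals max_episode_length.

baselines and rewards have the same shape (here \(m = N\) episodes and \(n = T\) time steps):

\[
\text{baselines} = \begin{bmatrix}
b_{11} & b_{12} & b_{13} & \cdots & b_{1n} \\
b_{21} & b_{22} & b_{23} & \cdots & b_{2n} \\
\vdots & \vdots & \vdots & \ddots & \vdots \\
b_{m1} & b_{m2} & b_{m3} & \cdots & b_{mn}
\end{bmatrix},
\qquad
\text{rewards} = \begin{bmatrix}
r_{11} & r_{12} & r_{13} & \cdots & r_{1n} \\
r_{21} & r_{22} & r_{23} & \cdots & r_{2n} \\
\vdots & \vdots & \vdots & \ddots & \vdots \\
r_{m1} & r_{m2} & r_{m3} & \cdots & r_{mn}
\end{bmatrix}
\]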

Parameters: - discount (float) – RL discount factor (i.e. gamma).

- gae_lambda (float) – Lambda, as used for Generalized Advantage Estimation (GAE).

- max_episode_length (int) – Maximum length of a single episode.

- baselines (torch.Tensor) – A 2D vector of value function estimates with shape (N, T), where N is the batch dimension (number of episodes) and T is the maximum episode length experienced by the agent. If an episode terminates in fewer than T time steps, the remaining elements in that episode should be set to 0.

- rewards (torch.Tensor) – A 2D vector of per-step rewards with shape (N, T), where N is the batch dimension (number of episodes) and T is the maximum episode length experienced by the agent. If an episode terminates in fewer than T time steps, the remaining elements in that episode should be set to 0.

Returns: A 2D vector of calculated advantage values with shape (N, T), where N is the batch dimension (number of episodes) and T is the maximum episode length experienced by the agent. If an episode terminates in fewer than T time steps, the remaining values in that episode should be set to 0.

Return type: torch.Tensor
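The conv2d formulation above can be sketched as follows. This is a minimal reimplementation under stated assumptions, not garage's source: it assumes the filter is applied to the TD residuals \(\delta_t = r_t + \gamma V_{t+1} - V_t\) (the standard GAE definition, with \(V = 0\) after the last step), so that \(A_t = \sum_{l \ge 0} (\gamma\lambda)^l \delta_{t+l}\). The names gae_advantages and gae_reference are hypothetical; the plain backward loop is there only to check the convolution.

```python
import torch
import torch.nn.functional as F


def gae_advantages(discount, gae_lambda, max_episode_length, baselines, rewards):
    """Sketch of GAE via conv2d; baselines and rewards have shape (N, T)."""
    # Filter [1, gl, gl^2, ..., gl^(T-1)] with gl = discount * gae_lambda.
    gl = torch.full((1, 1, 1, max_episode_length - 1), discount * gae_lambda)
    adv_filter = torch.cumprod(F.pad(gl, (1, 0), value=1.0), dim=-1)
    # TD residuals; the value after the last step is taken to be 0.
    next_values = F.pad(baselines, (0, 1))[:, 1:]
    deltas = rewards + discount * next_values - baselines
    # Right-pad with zeros so output t sums deltas t, t+1, ..., t+T-1.
    deltas = F.pad(deltas, (0, max_episode_length - 1))
    deltas = deltas.unsqueeze(0).unsqueeze(0)  # shape (1, 1, N, 2T-1)
    # conv2d is a cross-correlation, so the filter is not flipped.
    return F.conv2d(deltas, adv_filter).reshape(rewards.shape)


def gae_reference(discount, gae_lambda, baselines, rewards):
    """Naive backward recursion A_t = delta_t + discount*gae_lambda*A_{t+1}."""
    n, t_max = rewards.shape
    advantages = torch.zeros_like(rewards)
    for i in range(n):
        running = 0.0
        for t in reversed(range(t_max)):
            next_v = baselines[i, t + 1] if t + 1 < t_max else 0.0
            delta = rewards[i, t] + discount * next_v - baselines[i, t]
            running = delta + discount * gae_lambda * running
            advantages[i, t] = running
    return advantages


# Two episodes of max length 3; the second ends after one step (zero-padded).
rewards = torch.tensor([[1.0, 1.0, 1.0], [2.0, 0.0, 0.0]])
baselines = torch.tensor([[0.5, 0.4, 0.3], [1.0, 0.0, 0.0]])
adv = gae_advantages(0.99, 0.95, 3, baselines, rewards)
print(torch.allclose(adv, gae_reference(0.99, 0.95, baselines, rewards), atol=1e-5))
```

The convolution computes every time step of every episode in one call, which is why the zero padding of finished episodes matters: padded steps contribute zero residuals.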

-

dict_np_to_torch(array_dict)

Convert a dict whose values are numpy arrays to PyTorch tensors.

Modifies array_dict in place.

Parameters: array_dict (dict) – Dictionary of data in numpy arrays.

Returns: Dictionary of data in PyTorch tensors.

Return type: dict
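A minimal sketch of the in-place conversion, assuming each value is converted the way np_to_torch describes (a float tensor). The name dict_np_to_torch_sketch and its device argument are hypothetical; the documented function takes no device argument and uses the global device instead.

```python
import numpy as np
import torch


def dict_np_to_torch_sketch(array_dict, device=None):
    """Replace each numpy value in array_dict with a float32 tensor, in place."""
    for key, value in array_dict.items():
        # Reassigning existing keys while iterating is safe (no size change).
        # device=None places the tensor on the current default device.
        array_dict[key] = torch.as_tensor(value, dtype=torch.float32, device=device)
    return array_dict


batch = {"observations": np.zeros((4, 3)), "actions": np.array([0, 1, 1, 0])}
result = dict_np_to_torch_sketch(batch)
print(result is batch, batch["actions"].dtype)
```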

-

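filter_valids(tensor, valids)

Filter a tensor using valids (the last index of the valid entries in each row).

valids contains the last index of each row.

Parameters: - tensor (torch.Tensor) – Tensor to filter.

- valids (list[int]) – Last index of the valid entries in each row.

Returns: Filtered tensor.

Return type: torch.Tensor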
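The filtering can be sketched as slicing each row. This sketch assumes each valids entry acts as an exclusive slice end (row i keeps valids[i] elements) and returns a list of per-row tensors, because rows of different lengths cannot form one rectangular tensor; both points are assumptions, and filter_valids_sketch is a hypothetical name.

```python
import torch


def filter_valids_sketch(tensor, valids):
    """Keep the first valids[i] entries of row i (assumed exclusive end)."""
    return [tensor[i][:valid] for i, valid in enumerate(valids)]


padded = torch.tensor([[1.0, 2.0, 0.0, 0.0],
                       [3.0, 4.0, 5.0, 6.0],
                       [7.0, 0.0, 0.0, 0.0]])
rows = filter_valids_sketch(padded, [2, 4, 1])
print([row.tolist() for row in rows])
```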

-

flatten_batch(tensor)

Flatten a batch of observations.

Reshape a tensor of size (X, Y, Z) into (X*Y, Z).

Parameters: tensor (torch.Tensor) – Tensor to flatten.

Returns: Flattened tensor.

Return type: torch.Tensor
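A one-line sketch, assuming the first two dimensions (for example episodes and time steps) are the ones merged, in row-major order; flatten_batch_sketch is a hypothetical name.

```python
import torch


def flatten_batch_sketch(tensor):
    """Reshape (X, Y, Z) into (X * Y, Z); trailing dims are kept as-is."""
    return tensor.reshape((-1,) + tuple(tensor.shape[2:]))


obs = torch.arange(24).reshape(2, 3, 4)  # e.g. 2 episodes x 3 steps x 4 features
flat = flatten_batch_sketch(obs)
print(flat.shape)  # torch.Size([6, 4])
```

Row-major order means row 3 of the result is step 0 of episode 1.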

-

flatten_to_single_vector(tensor)

Collapse the C x H x W values per representation into a single long vector.

Reshape a tensor of size (N, C, H, W) into (N, C * H * W).

Parameters: tensor (torch.Tensor) – Batch of data.

Returns: Reshaped view of that data (analogous to numpy.reshape).

Return type: torch.Tensor
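A minimal sketch of the reshape, assuming a contiguous input so that a view (sharing storage with the input) can be returned; flatten_to_single_vector_sketch is a hypothetical name.

```python
import torch


def flatten_to_single_vector_sketch(tensor):
    """Reshape (N, C, H, W) into (N, C * H * W), keeping the batch dim."""
    # view() shares storage with the input, like numpy.reshape on a
    # contiguous array; it raises an error for non-contiguous inputs.
    return tensor.view(tensor.size(0), -1)


images = torch.rand(8, 3, 32, 32)
vectors = flatten_to_single_vector_sketch(images)
print(vectors.shape)  # torch.Size([8, 3072])
```

torch.flatten(tensor, start_dim=1) gives the same result and also accepts non-contiguous inputs, copying only when it has to.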

-

global_device()

Returns the global device that torch.Tensors should be placed on.

Note: The global device is set by using the function garage.torch._functions.set_gpu_mode. If this function is never called, garage.torch._functions.device() returns None.

Returns: The global device that newly created torch.Tensors should be placed on.

Return type: torch.device

-

class NonLinearity(non_linear)

Bases: torch.nn.Module

Wrapper class for a non-linear function or module.

Parameters: non_linear (callable or type) – Non-linear function or type to be wrapped.

-

forward(self, input_value)

Forward method.

Parameters: input_value (torch.Tensor) – Input values.

Returns: Output value.

Return type: torch.Tensor
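A minimal sketch of such a wrapper, assuming a type (such as torch.nn.ReLU) is instantiated once and any other callable (such as torch.tanh or a module instance) is stored and called directly; NonLinearitySketch is a hypothetical name, not garage's class.

```python
import torch
from torch import nn


class NonLinearitySketch(nn.Module):
    """Wrap a non-linear function or module type as an nn.Module."""

    def __init__(self, non_linear):
        super().__init__()
        if isinstance(non_linear, type):
            # A class such as nn.ReLU: build an instance of it.
            self.module = non_linear()
        elif callable(non_linear):
            # A function such as torch.tanh, or an existing module instance.
            self.module = non_linear
        else:
            raise ValueError(f"Non-linear function {non_linear} is not supported")

    def forward(self, input_value):
        return self.module(input_value)


x = torch.tensor([-1.0, 0.0, 2.0])
print(NonLinearitySketch(nn.ReLU)(x))  # tensor([0., 0., 2.])
print(NonLinearitySketch(torch.tanh)(x))
```

Wrapping both forms in an nn.Module lets either be placed inside containers such as nn.Sequential.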

-

-

np_to_torch(array)

Convert a numpy array to a PyTorch tensor.

Parameters: array (np.ndarray) – Data in numpy array.

Returns: Float tensor on the global device.

Return type: torch.Tensor

-

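pad_to_last(nums, total_length, axis=-1, val=0)

Pad val to the end of nums along the given axis.

The length of the result along the given axis should be total_length.

Parameters: - nums – Array to pad.

- total_length (int) – Length of the result along axis.

- axis (int) – Axis along which to pad.

- val – Value used for padding.

Returns: Padded array.

Return type: torch.Tensor

Raises: IndexError – If the input axis value is out of range of the nums array.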
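A sketch of the documented behavior, under the assumptions that nums is converted to a float tensor, that val fills the padded positions, and that nothing is trimmed when nums is already at least total_length long along axis. pad_to_last_sketch is a hypothetical name and this is not garage's implementation.

```python
import torch
import torch.nn.functional as F


def pad_to_last_sketch(nums, total_length, axis=-1, val=0):
    """Append val along axis until its length is total_length."""
    tensor = torch.as_tensor(nums, dtype=torch.float32)
    ndim = tensor.dim()
    if not -ndim <= axis < ndim:
        raise IndexError(f"axis {axis} is out of range for shape {tuple(tensor.shape)}")
    axis = axis % ndim  # normalize negative axes
    pad_amount = max(total_length - tensor.shape[axis], 0)
    # F.pad takes (left, right) pairs starting from the LAST dimension, so
    # we need ndim - axis pairs; the final entry is the right side of `axis`.
    padding = [0, 0] * (ndim - axis)
    padding[-1] = pad_amount
    return F.pad(tensor, tuple(padding), value=float(val))


out = pad_to_last_sketch([[1, 2], [3, 4]], total_length=4)
print(out.tolist())  # [[1.0, 2.0, 0.0, 0.0], [3.0, 4.0, 0.0, 0.0]]
print(pad_to_last_sketch([[1, 2]], total_length=3, axis=0, val=-1).tolist())
```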

-

product_of_gaussians(mus, sigmas_squared)

Compute the mu and sigma of a product of Gaussians.

Parameters: - mus (torch.Tensor) – Means, with shape \((N, M)\). M is the number of mean values.

- sigmas_squared (torch.Tensor) – Variances, with shape \((N, V)\). V is the number of variance values.

Returns: - torch.Tensor: Mu of product of gaussians, with shape \((N, 1)\).

- torch.Tensor: Sigma of product of gaussians, with shape \((N, 1)\).

Return type: tuple[torch.Tensor, torch.Tensor]
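For Gaussian factors, the product is (up to normalization) a Gaussian whose precision is the sum of the factor precisions: \(\sigma^2 = (\sum_i 1/\sigma_i^2)^{-1}\) and \(\mu = \sigma^2 \sum_i \mu_i/\sigma_i^2\). The sketch below applies this along the last dimension so \((N, M)\) inputs give \((N, 1)\) outputs, matching the documented shapes. The reduction axis, the small clamp on the variances, and the name product_of_gaussians_sketch are assumptions; the sketch returns the combined variance rather than a standard deviation.

```python
import torch


def product_of_gaussians_sketch(mus, sigmas_squared):
    """Combine Gaussians row-wise: (N, M) means/variances -> (N, 1) each."""
    # Guard against division by zero for degenerate variances.
    sigmas_squared = torch.clamp(sigmas_squared, min=1e-7)
    # Precisions add: 1 / sigma^2 = sum_i 1 / sigma_i^2.
    sigma_squared = 1.0 / torch.sum(1.0 / sigmas_squared, dim=-1, keepdim=True)
    # The mean is the precision-weighted average of the component means.
    mu = sigma_squared * torch.sum(mus / sigmas_squared, dim=-1, keepdim=True)
    return mu, sigma_squared


mus = torch.tensor([[0.0, 2.0], [1.0, 3.0]])
variances = torch.tensor([[1.0, 1.0], [1.0, 3.0]])
mu, var = product_of_gaussians_sketch(mus, variances)
print(mu.squeeze(-1).tolist(), var.squeeze(-1).tolist())
```

In the second row the factor with variance 3 gets a third of the weight of the other, so the combined mean (1.5) lies closer to 1 than to 3.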

-

set_gpu_mode(mode, gpu_id=0)

Set GPU mode and device ID.

Parameters: - mode (bool) – Whether or not to use the GPU.

- gpu_id (int) – ID of the GPU device to use.
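The global-device pattern described for global_device() and set_gpu_mode() can be sketched with a module-level variable. The names _DEVICE, set_gpu_mode_sketch, and global_device_sketch are hypothetical, and the "cuda:<gpu_id>" device string is an assumption about how the GPU ID is used.

```python
import torch

# Module-level state: stays None until set_gpu_mode_sketch() is called.
_DEVICE = None


def set_gpu_mode_sketch(mode, gpu_id=0):
    """Select cuda:<gpu_id> when mode is True, otherwise the CPU."""
    global _DEVICE
    _DEVICE = torch.device(f"cuda:{gpu_id}" if mode else "cpu")


def global_device_sketch():
    """Return the device new tensors should be placed on (None if unset)."""
    return _DEVICE


before = global_device_sketch()  # None: mode not set yet
set_gpu_mode_sketch(torch.cuda.is_available())
x = torch.zeros(3, device=global_device_sketch())
print(before, x.device)
```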

-

torch_to_np(tensors)

Convert PyTorch tensors to numpy arrays.

Parameters: tensors (tuple) – Tuple of data in PyTorch tensors.

Returns: Tuple of data in numpy arrays.

Return type: tuple[numpy.ndarray]

Note: This method is deprecated and has been replaced by garage.torch._functions.to_numpy.
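A sketch of the conversion; torch_to_np_sketch is a hypothetical name, and the detach() and cpu() calls are additions assumed useful here so that tensors that require gradients or live on a GPU also convert.

```python
import numpy as np
import torch


def torch_to_np_sketch(tensors):
    """Convert a tuple of tensors into a tuple of numpy arrays."""
    # detach() drops autograd history and cpu() moves GPU data to host memory;
    # Tensor.numpy() raises an error in either case without these calls.
    return tuple(t.detach().cpu().numpy() for t in tensors)


weights = torch.ones(2, requires_grad=True)
arrays = torch_to_np_sketch((weights * 3, torch.zeros(2, 2)))
print(type(arrays[0]).__name__, arrays[0])
```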

-

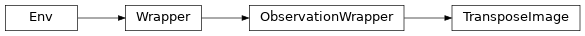

class TransposeImage(env=None)

Bases: gym.ObservationWrapper

Transpose the observation space of image observations for use in PyTorch.

-

spec

-

unwrapped

Completely unwrap this env.

Returns: The base non-wrapped gym.Env instance.

Return type: gym.Env

-

metadata

-

reward_range

-

action_space

-

observation_space

-

observation(self, observation)

Transpose image observation.

Parameters: observation (tensor) – Observation.

Returns: Transposed observation.

Return type: torch.Tensor
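Image environments commonly emit observations as height × width × channels, while PyTorch convolution layers expect channels first. Assuming that input layout, the transpose reduces to a single axis permutation, sketched here on a numpy array; transpose_image_sketch is a hypothetical name and the HWC layout is an assumption.

```python
import numpy as np


def transpose_image_sketch(observation):
    """Move the channel axis first: (H, W, C) -> (C, H, W)."""
    return observation.transpose(2, 0, 1)


frame = np.zeros((84, 96, 3), dtype=np.uint8)  # H=84, W=96, RGB
print(transpose_image_sketch(frame).shape)  # (3, 84, 96)
```

A full wrapper would also need its observation_space to describe the transposed shape, so that downstream code sees consistent dimensions.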

-

reset(self, **kwargs)

Resets the state of the environment and returns an initial observation.

Returns: The initial observation.

Return type: object

-

step(self, action)

Run one timestep of the environment’s dynamics. When the end of an episode is reached, you are responsible for calling reset() to reset this environment’s state.

Accepts an action and returns a tuple (observation, reward, done, info).

Parameters: action (object) – An action provided by the agent.

Returns: - observation (object) – Agent’s observation of the current environment.

- reward (float) – Amount of reward returned after the previous action.

- done (bool) – Whether the episode has ended, in which case further step() calls will return undefined results.

- info (dict) – Contains auxiliary diagnostic information (helpful for debugging, and sometimes learning).

Return type: tuple

-

classmethod class_name(cls)

-

render(self, mode='human', **kwargs)

Renders the environment.

The set of supported modes varies per environment. (And some environments do not support rendering at all.) By convention, if mode is:

- human: render to the current display or terminal and return nothing. Usually for human consumption.

- rgb_array: Return a numpy.ndarray with shape (x, y, 3), representing RGB values for an x-by-y pixel image, suitable for turning into a video.

- ansi: Return a string (str) or StringIO.StringIO containing a terminal-style text representation. The text can include newlines and ANSI escape sequences (e.g. for colors).

Note

Make sure that your class’s metadata 'render.modes' key includes the list of supported modes. It’s recommended to call super() in implementations to use the functionality of this method.

Parameters: mode (str) – The mode to render with.

Example:

    import gym
    import numpy as np


    class MyEnv(gym.Env):
        metadata = {'render.modes': ['human', 'rgb_array']}

        def render(self, mode='human'):
            if mode == 'rgb_array':
                return np.array(...)  # return RGB frame suitable for video
            elif mode == 'human':
                ...  # pop up a window and render
            else:
                super().render(mode=mode)  # just raise an exception

-

close(self)

Override close in your subclass to perform any necessary cleanup.

Environments will automatically close() themselves when garbage collected or when the program exits.

-

seed(self, seed=None)

Sets the seed for this env’s random number generator(s).

Note

Some environments use multiple pseudorandom number generators. We want to capture all such seeds used in order to ensure that there aren’t accidental correlations between multiple generators.

Returns: The list of seeds used in this env’s random number generators. The first value in the list should be the “main” seed, or the value which a reproducer should pass to ‘seed’. Often, the main seed equals the provided ‘seed’, but this won’t be true if seed=None, for example.

Return type: list<bigint>

-

compute_reward(self, achieved_goal, desired_goal, info)

-

-

update_module_params(module, new_params)

Load parameters into a module.

This function acts like torch.nn.Module._load_from_state_dict(), but it replaces the tensors in module with those in new_params, while _load_from_state_dict() loads only the values. Use this function so that the grad and grad_fn of new_params are preserved.

Parameters: - module (torch.nn.Module) – A torch module.

- new_params (dict) – A dict of torch tensors used as the new parameters of this module. This dict should be generated from torch.nn.Module.named_parameters().
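The idea of swapping in new tensors while keeping their autograd history can be sketched as below. This sketch assumes the swap is done through each submodule's private _parameters dict, an internal PyTorch detail that may change between versions; update_module_params_sketch is a hypothetical name. Because the new tensors are computed from the old parameters (for example by a differentiable gradient step), gradients of the module's output flow back to the originals.

```python
import torch
from torch import nn


def update_module_params_sketch(module, new_params):
    """Swap in tensors (keyed like named_parameters()) without detaching them."""
    named_modules = dict(module.named_modules())  # "" maps to module itself
    for full_name, new_param in new_params.items():
        # "0.weight" -> owner "0", attribute "weight"; "weight" -> owner "".
        owner_name, _, attr = full_name.rpartition(".")
        owner = named_modules[owner_name]
        # Remove the registered Parameter first: nn.Module.__setattr__ rejects
        # assigning a plain tensor to a name that is still a Parameter.
        del owner._parameters[attr]
        setattr(owner, attr, new_param)
        owner._parameters[attr] = new_param


net = nn.Linear(2, 1)
old = dict(net.named_parameters())
# A differentiable "update": the new tensors keep a grad_fn pointing at the old ones.
update_module_params_sketch(net, {name: p * 2 for name, p in old.items()})
net(torch.ones(1, 2)).sum().backward()
print(old["weight"].grad, old["bias"].grad)
```

Loading values with load_state_dict() instead would copy data into the existing leaf Parameters and cut this gradient path.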