garage.np.algos.meta_rl_algorithm

Interface of Meta-RL Algorithms.

-

class

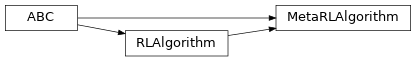

MetaRLAlgorithm¶ Bases:

garage.np.algos.rl_algorithm.RLAlgorithm,abc.ABC

Base class for Meta-RL Algorithms.
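Because MetaRLAlgorithm derives from abc.ABC and marks its methods abstract, a subclass can only be instantiated once it overrides all of them. The sketch below illustrates that contract with a hypothetical stand-in class (MetaRLAlgorithmSketch) instead of importing garage; the members the real class inherits from RLAlgorithm are deliberately left out, and the subclass names are illustrative only.

```python
import abc


class MetaRLAlgorithmSketch(abc.ABC):
    """Hypothetical stand-in for MetaRLAlgorithm.

    Mirrors only the two abstract methods documented on this page; the real
    class also inherits from garage.np.algos.rl_algorithm.RLAlgorithm.
    """

    @abc.abstractmethod
    def get_exploration_policy(self):
        """Return a policy used before adaptation to a specific task."""

    @abc.abstractmethod
    def adapt_policy(self, exploration_policy, exploration_episodes):
        """Produce a policy adapted for a task."""


class ExploreOnly(MetaRLAlgorithmSketch):
    """Implements only one abstract method, so it stays abstract."""

    def get_exploration_policy(self):
        return "exploration-policy"


class IdentityAdapter(MetaRLAlgorithmSketch):
    """Implements both methods; 'adaptation' here just returns the input."""

    def get_exploration_policy(self):
        return "exploration-policy"

    def adapt_policy(self, exploration_policy, exploration_episodes):
        return exploration_policy


def can_instantiate(cls):
    """Return True if cls() succeeds, False if abc rejects it with TypeError."""
    try:
        cls()
    except TypeError:
        return False
    return True


print(can_instantiate(ExploreOnly), can_instantiate(IdentityAdapter))  # False True
```

Python's abc machinery raises TypeError at construction time, so a partially implemented algorithm fails fast rather than at the first call to the missing method.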

abstract get_exploration_policy(self)

Return a policy used before adaptation to a specific task.

Each policy returned by this method should be evaluated in only one task.

- Returns

- The policy used to obtain samples, which are later used for meta-RL adaptation.

- Return type

-
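One way an implementation might honor the one-task rule is to hand out an independent copy of its policy on every call, so any per-task state the policy accumulates cannot leak into another task. The sketch below assumes a hypothetical ContextPolicy that records transitions and a hypothetical ToyMetaAlgorithm that returns a copy.deepcopy of it; this is an illustrative choice, not necessarily how garage's own algorithms do it.

```python
import copy


class ContextPolicy:
    """Hypothetical policy that accumulates per-task context as it acts."""

    def __init__(self):
        self.context = []

    def observe(self, transition):
        # Per-task state: anything recorded here belongs to one task only.
        self.context.append(transition)


class ToyMetaAlgorithm:
    """Hypothetical algorithm illustrating one way to honor the one-task rule."""

    def __init__(self):
        self._policy = ContextPolicy()

    def get_exploration_policy(self):
        # Return a fresh deep copy on every call so context gathered in one
        # task can never leak into the policy handed out for another task.
        return copy.deepcopy(self._policy)


algo = ToyMetaAlgorithm()
policy_a = algo.get_exploration_policy()
policy_b = algo.get_exploration_policy()
policy_a.observe(("task_a", 1.0))
print(len(policy_a.context), len(policy_b.context))  # 1 0
```

Copying trades memory for isolation; an algorithm whose exploration policy is stateless could return the same object each time without violating the rule.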

abstract adapt_policy(self, exploration_policy, exploration_episodes)

Produce a policy adapted for a task.

- Parameters

exploration_policy (Policy) – A policy that was returned from get_exploration_policy() and that generated exploration_episodes by interacting with an environment. The caller must not use this object after passing it into this method.

exploration_episodes (EpisodeBatch) – Episodes with which to adapt. These are generated by exploration_policy while exploring the environment.

- Returns

- A policy adapted to the task represented by the exploration_episodes.

- Return type

-
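The two abstract methods are meant to be called in sequence for each task: retrieve an exploration policy, collect episodes with it, then pass both to adapt_policy and use only the returned policy from then on. The sketch below walks through that loop on a toy one-step problem. Every name in it (ToyMetaAlgorithm, ExplorationPolicy, AdaptedPolicy, collect_episodes, meta_test) is hypothetical, and a plain list of (action, reward) pairs stands in for garage's EpisodeBatch.

```python
class ExplorationPolicy:
    """Hypothetical exploration policy: tries a fixed set of actions."""

    def __init__(self, candidates):
        self.candidates = list(candidates)


class AdaptedPolicy:
    """Hypothetical adapted policy: always plays one action."""

    def __init__(self, action):
        self.action = action


class ToyMetaAlgorithm:
    """Hypothetical algorithm following the get/adapt contract."""

    def __init__(self, candidates):
        self._candidates = list(candidates)

    def get_exploration_policy(self):
        # A fresh policy per call, so each one is evaluated in only one task.
        return ExplorationPolicy(self._candidates)

    def adapt_policy(self, exploration_policy, exploration_episodes):
        # exploration_episodes stands in for an EpisodeBatch: here, a list of
        # (action, reward) pairs produced by exploration_policy.
        best_action, _ = max(exploration_episodes, key=lambda ep: ep[1])
        return AdaptedPolicy(best_action)


def collect_episodes(policy, target):
    """Hypothetical sampler: one-step episodes with reward -|action - target|."""
    return [(a, -abs(a - target)) for a in policy.candidates]


def meta_test(algo, targets):
    """Adapt once per task and record the action each adapted policy plays."""
    results = {}
    for target in targets:
        exploration_policy = algo.get_exploration_policy()
        episodes = collect_episodes(exploration_policy, target)
        adapted = algo.adapt_policy(exploration_policy, episodes)
        # exploration_policy is not used again after being passed in.
        results[target] = adapted.action
    return results


algo = ToyMetaAlgorithm(candidates=[0.0, 2.5, 5.0, 7.5, 10.0])
print(meta_test(algo, targets=[1.0, 6.0, 9.0]))  # {1.0: 0.0, 6.0: 5.0, 9.0: 10.0}
```

Each task gets its own exploration policy, and after adaptation the loop only touches the returned policy, matching the ownership rule in the exploration_policy parameter description.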

abstract