garage.np.algos

Reinforcement learning algorithms which use NumPy as a numerical backend.

- class CEM(env_spec, policy, sampler, n_samples, discount=0.99, init_std=1, best_frac=0.05, extra_std=1.0, extra_decay_time=100)

Bases: garage.np.algos.rl_algorithm.RLAlgorithm

Cross Entropy Method.

CEM works by iteratively optimizing a Gaussian distribution over policy parameters.

In each epoch, CEM does the following:

1. Sample n_samples policies from a Gaussian distribution with mean cur_mean and standard deviation cur_std.
2. Collect episodes for each policy.
3. Update cur_mean and cur_std by Maximum Likelihood Estimation over the n_best policies with the highest return, where n_best is the best_frac fraction of n_samples.
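The three steps above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not garage's implementation: the hypothetical toy_return function stands in for collecting episodes with a sampler, policies are plain parameter vectors, and n_best is assumed to be int(n_samples * best_frac).

```python
import numpy as np


def toy_return(params):
    """Hypothetical stand-in for running episodes: higher return is better."""
    target = np.array([1.0, -2.0, 0.5])
    return -np.sum((params - target) ** 2)


def cem(n_samples=100, best_frac=0.1, init_std=2.0, n_epochs=30, seed=0):
    """Cross Entropy Method over a 3-dimensional parameter vector (sketch)."""
    rng = np.random.default_rng(seed)
    n_best = max(1, int(n_samples * best_frac))  # assumed elite count
    cur_mean = np.zeros(3)
    cur_std = np.full(3, init_std)
    for _ in range(n_epochs):
        # 1. Sample n_samples parameter vectors from N(cur_mean, cur_std**2).
        noise = rng.standard_normal((n_samples, cur_mean.size))
        samples = cur_mean + cur_std * noise
        # 2. "Collect episodes": score each sampled policy with the toy return.
        returns = np.array([toy_return(p) for p in samples])
        # 3. Maximum Likelihood fit of a diagonal Gaussian to the n_best
        #    samples with the highest return.
        elite = samples[np.argsort(returns)[-n_best:]]
        cur_mean = elite.mean(axis=0)
        cur_std = elite.std(axis=0)  # ddof=0 gives the MLE std
    return cur_mean
```

With these defaults the returned mean ends up close to the toy target (1.0, -2.0, 0.5).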

- Parameters

env_spec (EnvSpec) – Environment specification.

policy (garage.np.policies.Policy) – Action policy.

sampler (garage.sampler.Sampler) – Sampler.

n_samples (int) – Number of policies sampled in one epoch.

discount (float) – Environment reward discount.

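best_frac (float) – Fraction of the sampled policies, ranked by return, used to refit the distribution.

init_std (float) – Initial standard deviation of the policy parameter distribution.

extra_std (float) – Extra standard deviation added to the parameter distribution; it decays to zero over time.

extra_decay_time (float) – Number of epochs over which the extra standard deviation decays to zero.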
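One way extra_std and extra_decay_time can shape the sampling distribution is sketched below. The linear schedule and the helper name sample_std are assumptions for illustration: the extra variance is scaled by a factor that falls from 1 at epoch 0 to 0 at epoch extra_decay_time, and is added to the variance of the fitted distribution. Extra noise of this kind is a common guard against the CEM distribution collapsing before it reaches a good region.

```python
import numpy as np


def sample_std(cur_std, epoch, extra_std=1.0, extra_decay_time=100):
    """Std used to sample parameters at `epoch` (hypothetical helper).

    Assumed schedule: the extra variance extra_std**2 is multiplied by a
    factor that falls linearly from 1 at epoch 0 to 0 at extra_decay_time,
    and stays at 0 afterwards.
    """
    decay = max(1.0 - epoch / extra_decay_time, 0.0)
    return np.sqrt(np.square(cur_std) + np.square(extra_std) * decay)
```

Adding variances rather than standard deviations means the extra term matters most, relative to the fitted spread, once cur_std has already become small.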

- class CMAES(env_spec, policy, sampler, n_samples, discount=0.99, sigma0=1.0)

Bases: garage.np.algos.rl_algorithm.RLAlgorithm

Covariance Matrix Adaptation Evolution Strategy.

Note

The CMA-ES method rarely learns a successful policy, even for simple tasks. It is maintained here only for consistency with the original rllab paper.

- Parameters

env_spec (EnvSpec) – Environment specification.

policy (garage.np.policies.Policy) – Action policy.

sampler (garage.sampler.Sampler) – Sampler.

n_samples (int) – Number of policies sampled in one epoch.

discount (float) – Environment reward discount.

sigma0 (float) – Initial standard deviation of the parameter distribution.
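For intuition about what sigma0 and n_samples control, the sketch below adapts a full covariance matrix using the rank-μ update only. It is deliberately simplified (no evolution paths, no rank-one update, no step-size control), so it is not full CMA-ES and not how this class is implemented; the function name, the toy usage, and the choice mu = n_samples // 2 are assumptions for illustration.

```python
import numpy as np


def simplified_cma_es(objective, x0, sigma0=1.0, n_samples=16, n_epochs=60, seed=0):
    """Maximize `objective` with a rank-mu-only covariance adaptation loop.

    Simplified on purpose: no evolution paths, no rank-one update and no
    step-size control, so this is NOT full CMA-ES.
    """
    rng = np.random.default_rng(seed)
    mean = np.asarray(x0, dtype=float)
    dim = mean.size
    cov = np.eye(dim)  # covariance C starts as the identity
    mu = n_samples // 2  # number of selected parents (assumed)
    # Log-rank recombination weights: positive, decreasing, summing to 1.
    weights = np.log(mu + 0.5) - np.log(np.arange(1, mu + 1))
    weights /= weights.sum()
    mu_eff = 1.0 / np.sum(weights ** 2)
    # Rank-mu learning rate as in Hansen's CMA-ES tutorial, rank-one term dropped.
    c_mu = min(1.0, 2.0 * (mu_eff - 2.0 + 1.0 / mu_eff) / ((dim + 2) ** 2 + mu_eff))
    for _ in range(n_epochs):
        # Draw steps y ~ N(0, C) through a Cholesky factor of C.
        chol = np.linalg.cholesky(cov)
        steps = rng.standard_normal((n_samples, dim)) @ chol.T
        scores = np.array([objective(mean + sigma0 * y) for y in steps])
        best = steps[np.argsort(-scores)[:mu]]  # top-mu steps, best first
        mean = mean + sigma0 * (weights @ best)  # weighted recombination
        # Rank-mu update: blend C with the weighted outer products of the steps.
        cov = (1.0 - c_mu) * cov + c_mu * (best.T * weights) @ best
        cov = (cov + cov.T) / 2.0  # keep C numerically symmetric
    return mean
```

Here sigma0 stays fixed, so the covariance matrix alone has to shrink as the mean approaches the optimum; full CMA-ES additionally adapts a global step size, which is a large part of its efficiency.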

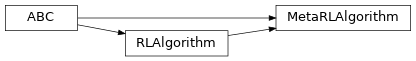

- class MetaRLAlgorithm

Bases: garage.np.algos.rl_algorithm.RLAlgorithm, abc.ABC

Base class for Meta-RL Algorithms.

- abstract get_exploration_policy()

Return a policy used before adaptation to a specific task.

Each time it is retrieved, this policy should only be evaluated in one task.

- Returns

The policy used to obtain samples, which are later used for meta-RL adaptation.

- Return type

Policy
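One way an implementation could honor the one-task-per-retrieval rule is to hand out a fresh copy of an exploration policy on every call, so that state gathered in one task never leaks into another. ToyPolicy and ExplorationPolicySource are hypothetical names used only for this sketch.

```python
import copy


class ToyPolicy:
    """Hypothetical stand-in for a policy that accumulates per-task state."""

    def __init__(self):
        self.seen_rewards = []


class ExplorationPolicySource:
    """Hypothetical helper returning a fresh exploration policy per call."""

    def __init__(self, prototype):
        self._prototype = prototype

    def get_exploration_policy(self):
        # Deep-copy the prototype so each retrieval starts from clean state
        # and can be dedicated to exactly one task.
        return copy.deepcopy(self._prototype)


source = ExplorationPolicySource(ToyPolicy())
task_a_policy = source.get_exploration_policy()
task_b_policy = source.get_exploration_policy()
task_a_policy.seen_rewards.append(1.0)  # state gathered while exploring task A
```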

- abstract adapt_policy(exploration_policy, exploration_episodes)

Produce a policy adapted for a task.

- Parameters

exploration_policy (Policy) – A policy which was returned from get_exploration_policy(), and which generated exploration_episodes by interacting with an environment. The caller must not use this object after passing it into this method.

exploration_episodes (EpisodeBatch) – Episodes with which to adapt. These are generated by exploration_policy while exploring the environment.

- Returns

A policy adapted to the task represented by the exploration_episodes.

- Return type

Policy
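The calling pattern implied by these two methods is sketched below: fetch a fresh exploration policy per task, collect episodes with it, then pass both to adapt_policy and stop using the exploration policy. The toy algorithm, the collect_episodes callback, and the use of plain lists of rewards in place of an EpisodeBatch are all hypothetical.

```python
import abc


class MetaRLAlgorithmSketch(abc.ABC):
    """Mirror of the two abstract methods of MetaRLAlgorithm (sketch only)."""

    @abc.abstractmethod
    def get_exploration_policy(self):
        """Return a policy to explore a single task."""

    @abc.abstractmethod
    def adapt_policy(self, exploration_policy, exploration_episodes):
        """Return a policy adapted using the exploration episodes."""


class MeanRewardAlgo(MetaRLAlgorithmSketch):
    """Hypothetical toy algorithm: 'adapts' by recording the mean reward."""

    def get_exploration_policy(self):
        return {"kind": "explore"}

    def adapt_policy(self, exploration_policy, exploration_episodes):
        rewards = [r for episode in exploration_episodes for r in episode]
        return {"kind": "adapted", "mean_reward": sum(rewards) / len(rewards)}


def meta_test(algo, tasks, collect_episodes):
    """Adapt once per task; `collect_episodes` is a hypothetical rollout function."""
    adapted = []
    for task in tasks:
        explorer = algo.get_exploration_policy()  # fresh policy for this task only
        episodes = collect_episodes(explorer, task)
        adapted.append(algo.adapt_policy(explorer, episodes))
        # `explorer` is not used again after being passed to adapt_policy.
    return adapted
```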

- class NOP

Bases: garage.np.algos.rl_algorithm.RLAlgorithm

NOP (no optimization performed) policy search algorithm.

- init_opt()

Initialize the optimization procedure.

- optimize_policy(paths)

Optimize the policy using the samples.
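Because NOP performs no optimization, its hooks reduce to doing nothing, and the policy after training is the policy before training. The sketch below mirrors the two documented method names on a hypothetical NOPSketch class, driven by a hypothetical train loop and sampler callback; it is not garage's training loop. An algorithm like this can serve, for example, as a baseline for the performance of a fixed policy.

```python
class NOPSketch:
    """Hypothetical mirror of a no-optimization algorithm's two hooks."""

    def __init__(self, policy_params):
        self.policy_params = list(policy_params)

    def init_opt(self):
        """Initialize the optimization procedure: nothing to set up."""

    def optimize_policy(self, paths):
        """Receive the samples and deliberately leave the policy unchanged."""
        del paths  # samples are ignored by design


def train(algo, n_itr, collect_paths):
    """Hypothetical training loop; `collect_paths` stands in for a sampler."""
    algo.init_opt()
    for itr in range(n_itr):
        paths = collect_paths(algo.policy_params, itr)
        algo.optimize_policy(paths)
    return algo.policy_params
```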